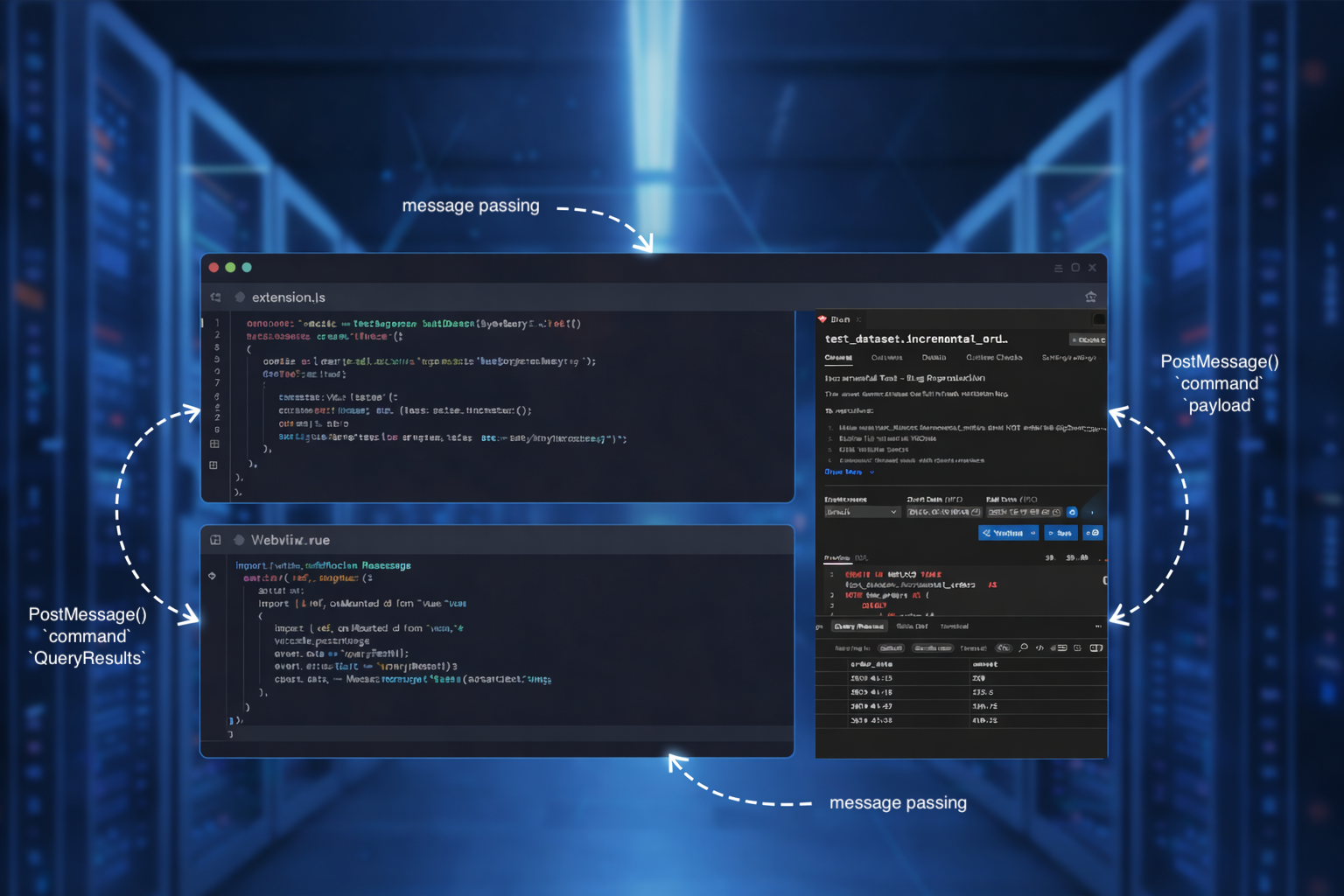

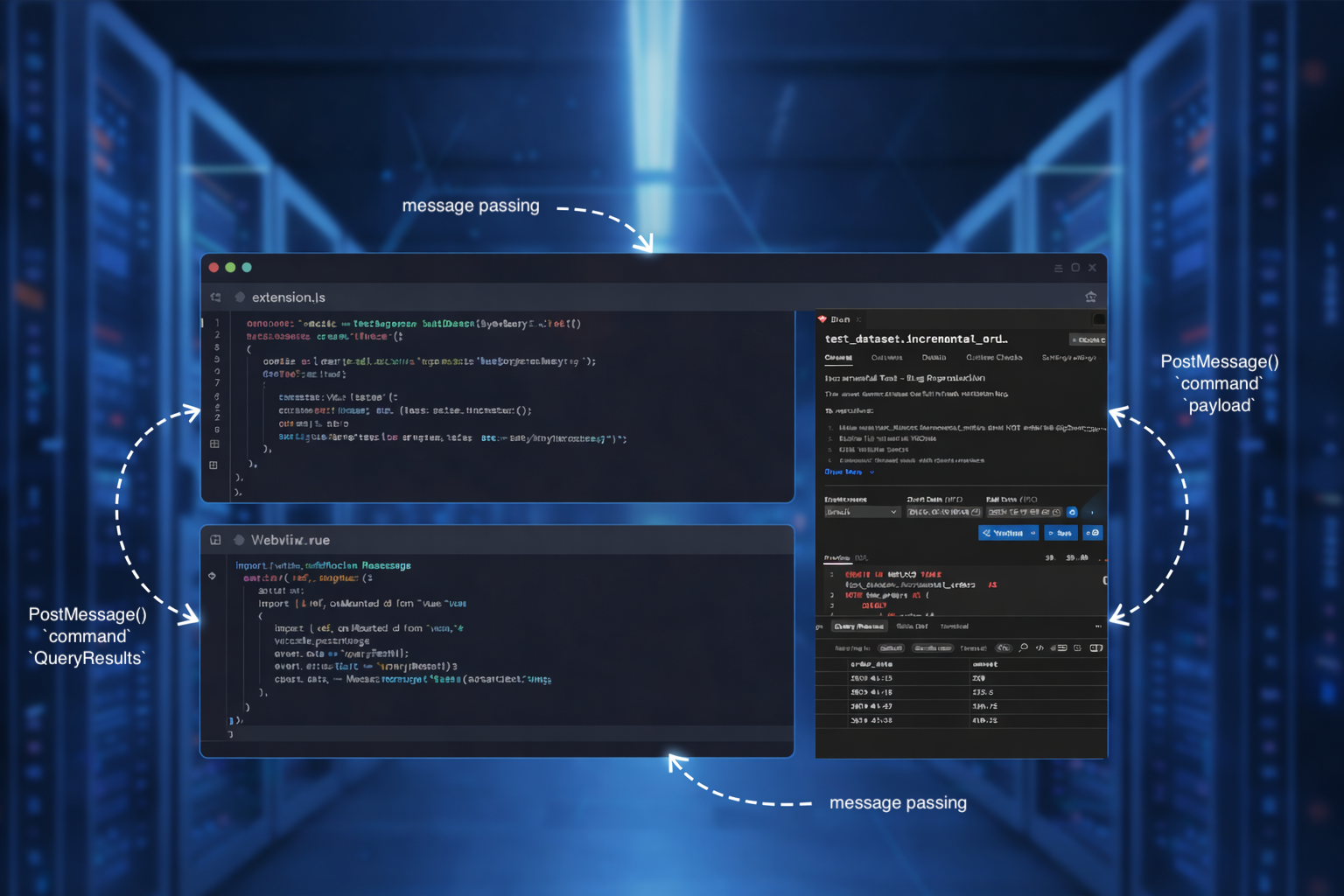

Bruin now supports the Model Context Protocol, letting AI agents in Cursor, Claude Code, and other editors query databases, ingest data, compare tables, and build pipelines—all through natural language.

Burak Karakan

Co-founder & CEO

We're excited to announce that Bruin CLI now supports the Model Context Protocol (MCP), bringing the full power of Bruin's data capabilities to your AI editor.

With Bruin MCP, you can ask your AI agent to query databases, ingest data from dozens of sources, compare tables across environments, build complete pipelines, and more—all without leaving your editor.

The Model Context Protocol is an open standard that lets AI agents interact with external tools and data sources. Think of it as a universal adapter that connects AI models to real-world systems.

Rather than just generating code suggestions, MCP-enabled AI agents can:

In short, MCP transforms AI assistants from code generators into active participants in your workflow.

Bruin has been built with a few design decisions in mind:

These design decisions were made to make humans productive with data. All of our tooling, product decisions, and engineering efforts are focused on increasing the productivity of data teams.

When AI agents came into our lives earlier this year, it became apparent to us that the investment we had made in making humans productive would also benefit AI agents. This was a fundamental shift in our thinking: agents would soon become our partners in productivity, and data teams would need to benefit from that as well.

The fact that we built everything as code, and as a CLI tool, made it easy to bring AI agents into the game. This is why we built Bruin MCP.

By bridging Bruin CLI with MCP-compatible editors like Cursor, Claude Code, and Codex, we're enabling a fundamentally different way to work with data: conversational data engineering.

At its core, MCP is a simple protocol: it exposes tools, and AI agents call these tools. The idea is that external systems have their own MCP servers, and AI agents interact with these systems without having to navigate a UI or learn new commands.
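At the wire level, MCP messages are JSON-RPC 2.0 requests. As a minimal sketch built with Python's standard json module (not an MCP SDK), these are the two calls behind "it exposes tools, and AI agents call these tools": tools/list to discover what a server offers, and tools/call to invoke one tool by name. The tool name is one of Bruin MCP's; the make_request helper is hypothetical, and a real client also performs an initialization handshake first, which is omitted here.

```python
import json
from typing import Optional


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Build a JSON-RPC 2.0 request string, the envelope MCP messages use."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Step 1: the agent asks the server which tools it exposes.
list_tools = make_request(1, "tools/list")

# Step 2: the agent calls one of those tools by name, passing its arguments.
call_tool = make_request(2, "tools/call", {"name": "bruin_get_overview", "arguments": {}})

print(list_tools)
print(call_tool)
```

The server answers each request with a JSON-RPC response carrying the same id, so the agent can match results to the calls it made.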

While this abstraction might work for some, we ran into a fundamental question: does MCP even make sense for CLI tools?

Think about it for a minute: CLI tools are designed to be executed in a terminal, and their output is typically text. AI agents are really good at understanding text and executing shell commands. So why do we need MCP for CLI tools?

There's another challenge: Bruin CLI has a lot of functionality, which means we would need to implement a separate tool for every high-level command and each of its subcommands. That would be a lot of work, and the tool definitions alone would quickly fill the AI agents' context windows.

Our first attempt at getting AI agents to use Bruin CLI relied on AGENTS.md files, in which we manually described a few Bruin CLI commands and how to use them. This quick and dirty approach let us bring AI into Bruin workflows fast. It worked nicely, but every command had to be described by hand, and the agents had to learn the command syntax from those descriptions. It was not fit for production.

While we were playing around with different AI agents, an idea popped into our heads: AI agents are really good at navigating files and directories, and at executing commands in a terminal. Why not use this to our advantage?

We have decent documentation for our CLI, and it is already in markdown format. Could we make the MCP server read that documentation, and let the agent execute commands based on it?

This thought process led us to the current design of Bruin MCP, which consists of 3 simple tools:

bruin_get_overview: Tell the agent the basics of Bruin, what it does, and how to use it.

bruin_get_docs_tree: Get the tree of the documentation, and the commands available.

bruin_get_doc_content: Read the content of a specific documentation file.

This means that the AI agent can navigate the documentation by itself and execute the CLI commands it finds there using the shell.
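The three tools boil down to reading an overview, listing markdown files, and reading one of them. The following is a minimal sketch under stated assumptions, not Bruin's implementation: the docs live in a local directory, the overview is a README.md at its root, and the function names and the path parameter are hypothetical stand-ins for the real tools. It also includes a path check, since the agent chooses which file to read.

```python
import tempfile
from pathlib import Path

# Hypothetical default location of the markdown docs; not Bruin's actual layout.
DOCS_ROOT = Path("docs")


def get_overview(root: Path = DOCS_ROOT) -> str:
    """Return the overview page, assumed here to be README.md at the docs root."""
    return (root / "README.md").read_text(encoding="utf-8")


def get_docs_tree(root: Path = DOCS_ROOT) -> list[str]:
    """List every markdown file relative to the docs root, sorted for stable output."""
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*.md"))


def get_doc_content(path: str, root: Path = DOCS_ROOT) -> str:
    """Read one doc file, refusing paths that resolve outside the docs root."""
    base = root.resolve()
    target = (base / path).resolve()
    if not target.is_relative_to(base):
        raise ValueError(f"path outside docs root: {path}")
    return target.read_text(encoding="utf-8")


# Usage: build a throwaway docs tree and navigate it the way an agent would.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "README.md").write_text("# Bruin overview\n", encoding="utf-8")
    (root / "commands").mkdir()
    (root / "commands" / "query.md").write_text("# bruin query\n", encoding="utf-8")

    overview = get_overview(root)
    tree = get_docs_tree(root)  # ['README.md', 'commands/query.md']
    page = get_doc_content("commands/query.md", root)
    try:
        get_doc_content("../secret.md", root)
        blocked = False
    except ValueError:
        blocked = True
```

Because the tree is computed from whatever files exist, a newly added doc page becomes visible to the agent without any code change.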

The beauty of this approach is that it is incredibly simple, flexible, and very easy to maintain. Every new feature we document is automatically available to the AI agent. Any agent that can call tools can immediately start using Bruin MCP. All without us having to maintain a separate tool for each command.

This is an opinionated approach, and we're open to feedback on it. At this point, it feels like MCP servers do not make much sense for CLI tools, other than for exposing documentation about the tool itself.

Bruin allows you to build end-to-end pipelines, which means any data engineering task you have can be at least partially automated with AI agents using Bruin MCP.

Ask your AI agent to run SQL queries across PostgreSQL, BigQuery, Snowflake, DuckDB, and many more platforms:

"What's the total revenue by product category this quarter?"

Your AI agent connects to your configured databases, runs the query, and shows you the results, with no context switching required. Under the hood, it uses the bruin query command with the connections you have already configured.

Bruin supports 60+ data sources through our open-source ingestion framework. With MCP, you can trigger ingestion with plain English:

"Bring all my Shopify order data from last week into BigQuery."

The AI agent writes the ingestion config, executes it, and confirms when the data is loaded. What used to take 30 minutes of reading docs and writing config now takes 30 seconds of typing a sentence. It uses ingestr assets for Shopify, Stripe, Google Sheets, and many more sources.

Bruin allows you to define your SQL data assets as code, and version them. With MCP, you can update the SQL data assets with plain English:

"Update the users table to add a new column for the user's phone number, and set it to required."

The AI agent updates the SQL data asset and confirms the change.

One of the hardest parts of data pipeline development is validating that your changes produce the correct output. Bruin's built-in data-diff command makes this easy, and MCP makes it trivial:

"Compare the users table between dev and prod."

The AI agent runs the comparison, highlights differences, and helps you diagnose discrepancies—all in a conversational thread.
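Here the agent runs Bruin's data-diff command, whose output format this post does not show. To illustrate the kind of comparison involved, here is a hypothetical, simplified sketch that compares two table summaries (column types and row counts) and reports the differences. The TableSummary shape, the diff_tables helper, and the sample values are invented for illustration and are not Bruin's implementation.

```python
from dataclasses import dataclass


@dataclass
class TableSummary:
    """Hypothetical summary of one table in one environment."""
    columns: dict[str, str]  # column name -> type
    row_count: int


def diff_tables(dev: TableSummary, prod: TableSummary) -> list[str]:
    """Return human-readable differences between two table summaries."""
    findings = []
    for name in sorted(dev.columns.keys() - prod.columns.keys()):
        findings.append(f"column only in dev: {name}")
    for name in sorted(prod.columns.keys() - dev.columns.keys()):
        findings.append(f"column only in prod: {name}")
    for name in sorted(dev.columns.keys() & prod.columns.keys()):
        if dev.columns[name] != prod.columns[name]:
            findings.append(
                f"type mismatch on {name}: dev={dev.columns[name]} prod={prod.columns[name]}"
            )
    if dev.row_count != prod.row_count:
        findings.append(f"row count differs: dev={dev.row_count} prod={prod.row_count}")
    return findings


# Sample data: dev has the new phone column from the earlier example, plus extra rows.
dev = TableSummary({"id": "INT64", "email": "STRING", "phone": "STRING"}, 1200)
prod = TableSummary({"id": "INT64", "email": "STRING"}, 1180)
for line in diff_tables(dev, prod):
    print(line)
```

Running it prints "column only in dev: phone" followed by "row count differs: dev=1200 prod=1180", which is the kind of finding the agent would then help you explain.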

Bruin MCP has access to up-to-date Bruin documentation, meaning your AI agent can scaffold entire pipelines based on best practices:

"Create a pipeline that ingests Stripe data daily, calculates MRR by plan, and runs freshness checks."

The agent creates the pipeline structure, writes SQL transformations, adds quality checks, and even suggests materialization strategies—then validates the pipeline before you run it.

No more switching to a browser to search docs:

"How do I set up a Snowflake connection in Bruin?"

The AI agent fetches the relevant documentation, shows you the configuration format, and can even add the connection for you if you provide credentials.

Setting up Bruin MCP takes less than a minute.

If you haven't already, install Bruin CLI:

curl -LsSf https://getbruin.com/install/cli | sh

Or follow the installation guide for other platforms.

For Claude Code, run:

claude mcp add bruin -- bruin mcp

In Cursor, go to Settings → MCP & Integrations → Add Custom MCP and add:

{
  "mcpServers": {
    "bruin": {
      "command": "bruin",
      "args": ["mcp"]
    }
  }
}
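If your MCP configuration already lists other servers, the bruin entry belongs alongside them inside mcpServers rather than replacing that object. A minimal sketch of that merge using Python's json module follows; it works on the configuration text because this post does not say where the file lives on disk, and both the add_bruin_server helper and the "other" server in the example are hypothetical.

```python
import json


def add_bruin_server(config_text: str) -> str:
    """Add a bruin entry under mcpServers while keeping any servers already defined."""
    config = json.loads(config_text) if config_text.strip() else {}
    servers = config.setdefault("mcpServers", {})
    # Same command and args as the Cursor snippet: run `bruin mcp` as the server.
    servers["bruin"] = {"command": "bruin", "args": ["mcp"]}
    return json.dumps(config, indent=2)


# Hypothetical existing config with one unrelated server already registered.
existing = '{"mcpServers": {"other": {"command": "other-server", "args": []}}}'
merged = json.loads(add_bruin_server(existing))
print(sorted(merged["mcpServers"]))  # ['bruin', 'other']
```

Re-running the helper is harmless: it overwrites the bruin entry with the same values and leaves every other server untouched.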

For Codex, add the following to ~/.codex/config.toml:

[mcp_servers.bruin]
command = "bruin"
args = ["mcp"]

Initialize a Bruin project (or use an existing one):

bruin init
cd my-project

Now you can ask your AI agent anything:

Check out the full documentation for more examples and setup details.

Bruin MCP is just the beginning. We're exploring:

Bruin MCP is available now, completely free and open source. Install Bruin CLI, configure your editor, and start building data workflows through conversation.

We'd love to hear your feedback. What workflows do you want to automate? What could your AI agent do better? Let us know by opening an issue or joining the conversation in Slack.

Happy building!
