If you're building data pipelines in 2026, you've likely heard of dbt (data build tool). It's become the de facto standard for SQL transformations in the modern data stack. But here's the problem: dbt only solves one piece of the puzzle.

What if you could get ingestion, transformation, quality checks, and orchestration—all in one tool? That's exactly what Bruin delivers. Let's dive into why an end-to-end approach beats cobbling together multiple tools.

dbt focuses exclusively on transformation. It takes data that's already in your warehouse and transforms it using SQL (and limited Python). That's it. Everything else—getting data into your warehouse, scheduling pipelines, monitoring quality—requires additional tools.

A typical dbt stack looks like this:

- Ingestion tool (Fivetran, Airbyte, or custom scripts)

- dbt for transformations

- Orchestrator (Airflow, Dagster, Prefect, or dbt Cloud)

- Observability tools (Monte Carlo, dbt Cloud)

- Catalog/Lineage tools (separate or dbt Cloud)

That's 3-5 different tools to manage, configure, and integrate. Each one requires its own authentication, monitoring, and maintenance.

Bruin takes a different approach: everything you need in a single, unified framework.

With Bruin, you get:

- ✅ Data ingestion (100+ connectors via ingestr)

- ✅ SQL & Python transformations (both first-class citizens)

- ✅ Built-in orchestration (no Airflow needed)

- ✅ Data quality checks (blocking by default)

- ✅ Column-level lineage (built-in, even locally)

- ✅ VS Code extension (visual lineage and execution)

One tool. One CLI. One configuration format. One deployment.

dbt doesn't handle data ingestion. You need to solve this yourself with:

- Fivetran - Expensive SaaS solution ($$$$)

- Airbyte - Open-source but requires deployment and maintenance

- Custom Python scripts - Flexible but high maintenance burden

- Cloud-native tools - AWS Glue, Azure Data Factory (vendor lock-in)

Each solution comes with its own complexity: separate infrastructure, different authentication systems, and coordination headaches between tools.

Bruin's ingestion engine is built on ingestr, an open-source data ingestion tool with 100+ connectors. Define your ingestion with simple YAML:

```yaml
name: raw.users
type: ingestr
parameters:
  source_connection: postgresql
  source_table: 'public.users'
  destination: bigquery
  incremental_strategy: merge
  incremental_key: updated_at
  primary_key: id
```

That's it. No separate infrastructure. No complex configuration. Just YAML.

Bruin supports multiple incremental loading strategies out of the box:

1. Append - Add new rows without touching existing data

```yaml
incremental_strategy: append
incremental_key: created_at
```

2. Merge - Upsert based on primary keys (updates existing, inserts new)

```yaml
incremental_strategy: merge
primary_key: id
incremental_key: updated_at
```

3. Delete+Insert - Delete matching rows, insert new ones

```yaml
incremental_strategy: delete+insert
incremental_key: date
```

4. Replace - Full table replacement

```yaml
incremental_strategy: replace
```

These strategies ensure you're only moving the data you need, not re-ingesting entire tables every time.
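To make the difference between the four strategies concrete, here is a minimal in-memory sketch in Python. It is not how Bruin or ingestr implement loading; `apply_strategy` and the sample rows are hypothetical names chosen for illustration, and the delete+insert branch assumes "matching rows" means rows that share an incremental key value with the incoming batch.

```python
from typing import Dict, List

Row = Dict[str, object]


def apply_strategy(
    target: List[Row],
    incoming: List[Row],
    strategy: str,
    primary_key: str = "id",
    incremental_key: str = "date",
) -> List[Row]:
    """Return the target table contents after loading `incoming` (illustrative only)."""
    if strategy == "replace":
        # Full refresh: the target is discarded and rebuilt from the batch.
        return list(incoming)
    if strategy == "append":
        # New rows are added; existing rows are never touched.
        return target + incoming
    if strategy == "merge":
        # Upsert: rows with a known primary key are updated, others inserted.
        by_key = {row[primary_key]: row for row in target}
        for row in incoming:
            by_key[row[primary_key]] = row
        return list(by_key.values())
    if strategy == "delete+insert":
        # Assumption: drop every target row whose incremental key value
        # appears in the batch, then insert the batch.
        touched = {row[incremental_key] for row in incoming}
        kept = [row for row in target if row[incremental_key] not in touched]
        return kept + incoming
    raise ValueError(f"unknown strategy: {strategy}")


target = [
    {"id": 1, "date": "2024-01-01", "amount": 10},
    {"id": 2, "date": "2024-01-02", "amount": 20},
]
incoming = [
    {"id": 2, "date": "2024-01-02", "amount": 25},
    {"id": 3, "date": "2024-01-03", "amount": 30},
]

for name in ("append", "merge", "delete+insert", "replace"):
    result = apply_strategy(target, incoming, name)
    print(name, [(r["id"], r["amount"]) for r in result])
```

With this sample data, append keeps both versions of row 2, merge and delete+insert end up with one updated copy of it, and replace drops row 1 entirely because it is not in the incoming batch.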

Bonus: Need a custom connector? Bruin offers a 1-week SLA for custom connector development.

This is where the differences become stark.

dbt added Python models later in its lifecycle (in dbt Core 1.3), and it shows:

- ❌ Platform-specific - Requires DataFrame API specific to your data warehouse

- ❌ Not all platforms support it - Check if your warehouse even supports Python models

- ❌ Can't easily mix SQL and Python - Awkward workflow when you need both

- ❌ Limited flexibility - Constrained by what your warehouse supports

If you want to use scikit-learn, TensorFlow, or any ML library, you're often out of luck with dbt.

Bruin was built from day one with native Python support:

- ✅ Use any Python library - pandas, numpy, scikit-learn, TensorFlow, PyTorch, whatever you need

- ✅ Isolated environments with uv - Each asset runs in its own environment

- ✅ Mix SQL & Python freely - Build pipelines that flow from SQL to Python and back

- ✅ Cross-language dependencies - SQL assets can depend on Python assets and vice versa

- ✅ Multiple Python versions - Run different Python versions in the same pipeline

Here's a real example:

```python
# assets/ml_predictions.py
"""
@bruin
name: analytics.ml_predictions
type: python
depends:
  - analytics.user_features  # SQL asset
materialization:
  type: table
@bruin
"""

import pandas as pd
from sklearn.ensemble import RandomForestClassifier


def main(connection):
    # Load data from the SQL upstream dependency
    df = connection.read_sql("SELECT * FROM analytics.user_features")

    # Train your model
    model = RandomForestClassifier()
    # ... model training logic ...

    # Return predictions as DataFrame
    # (predictions_df is produced by the elided training logic above)
    return predictions_df
```

This Python asset depends on a SQL asset (analytics.user_features), trains a machine learning model, and outputs results that downstream SQL assets can use. This workflow is nearly impossible in dbt.

Bruin uses uv to manage isolated Python environments for each asset. This means:

- No global dependency conflicts

- Reproducible execution

- Automatic dependency installation

- Different Python versions in the same pipeline

```yaml
# asset.yml
name: analytics.ml_model
type: python
parameters:
  python_version: "3.11"
  dependencies:
    - pandas==2.0.0
    - scikit-learn==1.3.0
    - tensorflow==2.14.0
```

Good news if you're migrating from dbt: Bruin supports Jinja templating too.

```sql
-- assets/daily_revenue.sql
/*
@bruin
name: analytics.daily_revenue
depends:
  - raw.orders
@bruin
*/

SELECT
    DATE_TRUNC('day', created_at) AS date,
    SUM(amount) AS revenue
FROM raw.orders
WHERE created_at >= '{{ start_date }}'
  AND status = 'completed'
GROUP BY 1
```

Bruin supports Jinja templating with:

- Variables and parameters

- Macros for code reuse

- Control structures (if/else, loops)

- Custom filters

You're not losing familiar patterns—you're gaining capabilities.
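To show what variable substitution does to a query before it reaches the warehouse, here is a small Python sketch. It is a regex stand-in for the `{{ name }}` placeholder syntax only, not the Jinja engine and not Bruin's renderer; `render` and `PLACEHOLDER` are hypothetical names, and macros, filters, and control structures are out of scope.

```python
import re

# Matches placeholders such as {{ start_date }} with optional inner spaces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: dict) -> str:
    """Replace each `{{ name }}` placeholder with its value from `variables`."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            # Fail loudly instead of sending a half-rendered query downstream.
            raise KeyError(f"undefined template variable: {name}")
        return str(variables[name])

    return PLACEHOLDER.sub(substitute, template)


query = "SELECT * FROM raw.orders WHERE created_at >= '{{ start_date }}'"
print(render(query, {"start_date": "2024-01-01"}))
```

Raising on an undefined variable is a deliberate choice in this sketch: a silently empty date filter is harder to notice than a failed render.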

dbt has no orchestration capabilities. You must use an external tool:

Apache Airflow

- Complex setup and maintenance

- Steep learning curve

- Requires dedicated infrastructure

Dagster

- Modern but still requires separate deployment

- Another tool to learn and manage

Prefect

- Cloud-first approach

- Additional service to maintain

dbt Cloud

- Managed orchestration option

- $$$ - Can get expensive

- Vendor lock-in considerations

Each option means more infrastructure, more authentication to manage, and more tools to integrate.

Bruin has built-in orchestration. No Airflow. No Kubernetes. No complexity.

```bash
# Run your entire pipeline locally
bruin run

# Backfill historical data
bruin run --start-date 2024-01-01 --end-date 2024-12-31
```

Deployment options:

- Local Development - bruin run on your machine

- GitHub Actions - CI/CD integration with single binary

- EC2 / VM - Self-hosted, no dependencies required

- Bruin Cloud - Fully managed with governance and monitoring

The single binary approach means you can deploy anywhere—no Docker, no Kubernetes, no complex orchestration infrastructure.
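At its core, orchestrating a pipeline means running assets in an order that respects their declared dependencies. The sketch below illustrates that idea with Python's standard-library `graphlib`. It says nothing about how Bruin's scheduler is actually implemented; the `pipeline` mapping and `execution_order` are hypothetical, and the dependency edges between the asset names used in this article are assumed for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each asset maps to the set of assets it depends on.
pipeline = {
    "raw.orders": set(),
    "raw.users": set(),
    "analytics.user_features": {"raw.users", "raw.orders"},   # SQL asset
    "analytics.ml_predictions": {"analytics.user_features"},  # Python asset
    "analytics.daily_revenue": {"raw.orders"},                # SQL asset
}


def execution_order(dependencies):
    """Return asset names ordered so that every upstream asset comes first."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(pipeline)
print(order)
```

The sorter does not care whether an asset is SQL or Python, which is why cross-language dependencies fit into a single graph. A circular dependency raises `graphlib.CycleError` instead of producing an order.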

In dbt, tests are defined separately from transformations:

```yaml
# schema.yml
models:
  - name: users
    columns:
      - name: email
        tests:
          - not_null
          - unique
```

Limitations:

- Tests don't run automatically; you must execute dbt test

- Not part of the pipeline execution flow

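- Failures don't prevent downstream models from running unless you switch to dbt build, which runs tests alongside models and skips the dependents of a failed test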

- Separate mindset: "build models, then test"

In Bruin, quality checks are embedded in your asset definitions and run automatically after each transformation:

```yaml
# assets/users.sql
name: analytics.users
type: sql
materialization:
  type: table
columns:
  - name: email
    type: string
    checks:
      - name: not_null
      - name: unique
  - name: revenue
    type: float
    checks:
      - name: positive
  - name: country_code
    type: string
    checks:
      - name: accepted_values
        value: ['US', 'UK', 'CA', 'DE', 'FR']
```

Key advantages:

- ✅ Blocking by default - Bad data can't proceed to downstream assets

- ✅ Automatic execution - Runs after every transformation

- ✅ Works on all asset types - Ingestion, SQL, Python

- ✅ Custom SQL checks - Define any validation logic you need

- ✅ Optional non-blocking mode - For monitoring without stopping pipelines
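The blocking behaviour can be pictured as a gate between an asset and whatever runs after it. The Python sketch below evaluates the four column checks from the example above on in-memory rows and refuses to call the downstream step when any of them fails. It is an illustration of the concept, not Bruin's implementation; `failed_checks`, `run_asset`, and the sample rows are hypothetical.

```python
def failed_checks(rows, column_checks):
    """Return "column.check" labels for every check that does not pass."""
    failures = []
    for column, checks in column_checks.items():
        values = [row[column] for row in rows]
        for check in checks:
            name = check["name"]
            if name == "not_null":
                ok = all(v is not None for v in values)
            elif name == "unique":
                ok = len(values) == len(set(values))
            elif name == "positive":
                ok = all(v is not None and v > 0 for v in values)
            elif name == "accepted_values":
                ok = all(v in check["value"] for v in values)
            else:
                raise ValueError(f"unknown check: {name}")
            if not ok:
                failures.append(f"{column}.{name}")
    return failures


def run_asset(rows, column_checks, downstream):
    """Call `downstream` only if every check passes; otherwise block it."""
    failures = failed_checks(rows, column_checks)
    if failures:
        raise RuntimeError("blocked by failed checks: " + ", ".join(failures))
    return downstream(rows)


column_checks = {
    "email": [{"name": "not_null"}, {"name": "unique"}],
    "revenue": [{"name": "positive"}],
    "country_code": [
        {"name": "accepted_values", "value": ["US", "UK", "CA", "DE", "FR"]}
    ],
}

good_rows = [
    {"email": "a@example.com", "revenue": 10.0, "country_code": "US"},
    {"email": "b@example.com", "revenue": 5.5, "country_code": "DE"},
]
# One extra row that duplicates an email, has negative revenue,
# and uses a country code outside the accepted list.
bad_rows = good_rows + [
    {"email": "a@example.com", "revenue": -1.0, "country_code": "XX"}
]

print(failed_checks(good_rows, column_checks))
print(failed_checks(bad_rows, column_checks))
```

A non-blocking mode would correspond to logging the failures returned by `failed_checks` and calling `downstream` anyway.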

Custom checks example:

```yaml
custom_checks:
  - name: revenue_matches_sum
    query: |
      SELECT COUNT(*) FROM analytics.users
      WHERE total_revenue != (
        SELECT SUM(order_amount)
        FROM analytics.orders
        WHERE user_id = users.id
      )
    value: 0
```

If this check fails, the pipeline stops. No bad data flows downstream.
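A custom check of this shape boils down to running a query that returns a single number and comparing it with the expected value. The sketch below reproduces that with Python's built-in `sqlite3` module so it can run anywhere. The tables are simplified stand-ins without the `analytics.` schema prefix, and `run_custom_check` is a hypothetical helper, not part of Bruin.

```python
import sqlite3


def run_custom_check(conn, query, expected):
    """Run a single-value query and report whether it returned `expected`."""
    (actual,) = conn.execute(query).fetchone()
    return actual == expected


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE users (id INTEGER PRIMARY KEY, total_revenue REAL);
    CREATE TABLE orders (user_id INTEGER, order_amount REAL);
    INSERT INTO users VALUES (1, 30.0), (2, 15.0);
    INSERT INTO orders VALUES (1, 10.0), (1, 20.0), (2, 15.0);
    """
)

# Counts users whose stored total disagrees with the sum of their orders.
query = """
    SELECT COUNT(*) FROM users
    WHERE total_revenue != (
        SELECT SUM(order_amount) FROM orders WHERE user_id = users.id
    )
"""

print(run_custom_check(conn, query, expected=0))  # totals match the orders

# Corrupt one total so the check has something to catch.
conn.execute("UPDATE users SET total_revenue = 99.0 WHERE id = 2")
print(run_custom_check(conn, query, expected=0))  # user 2 no longer matches
```

One caveat about the query itself: a user with no orders yields a NULL sum, and a comparison with NULL is never true in SQL, so such users are not counted as mismatches.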

As dbt projects grow, teams encounter:

- ❌ Scalability issues - Projects with 400+ models become slow

- ❌ Long compilation times - Jinja templates add overhead

- ❌ DAG complexity - Dependency resolution slows down

- ❌ Python overhead - dbt is written in Python, which isn't the fastest

- ❌ Fusion engine not open-source - dbt's performance improvements via the Fusion engine are only available in dbt Cloud (paid), creating a revenue play that pushes teams toward the managed service

Bruin is written in Go, which provides:

- ✅ Fast execution - Compiled to native machine code

- ✅ Low overhead - Single binary, no runtime dependencies

- ✅ Efficient orchestration - Built-in scheduler with minimal overhead

- ✅ Quick compilation - Processes large pipelines rapidly

Weather intelligence company Buluttan migrated from dbt to Bruin and achieved:

- 3x faster pipeline execution

- 90% faster deployments

- 15 minutes to respond to issues (vs. hours before)

"Bruin's product has effectively addressed all the challenges my team faced in developing, orchestrating, and monitoring our pipelines."

— Arsalan Noorafkan, Team Lead Data Engineering at Buluttan

These aren't theoretical improvements—they're production results from a real engineering team.

On developer experience, dbt offers:

- ✅ CLI for running models and tests

- ✅ Auto-generated documentation

- ✅ Large community (100k+ users)

- ⚠️ Steep learning curve with Jinja

- ⚠️ Limited IDE support beyond dbt Cloud IDE

- ❌ No column-level lineage visualization in open-source

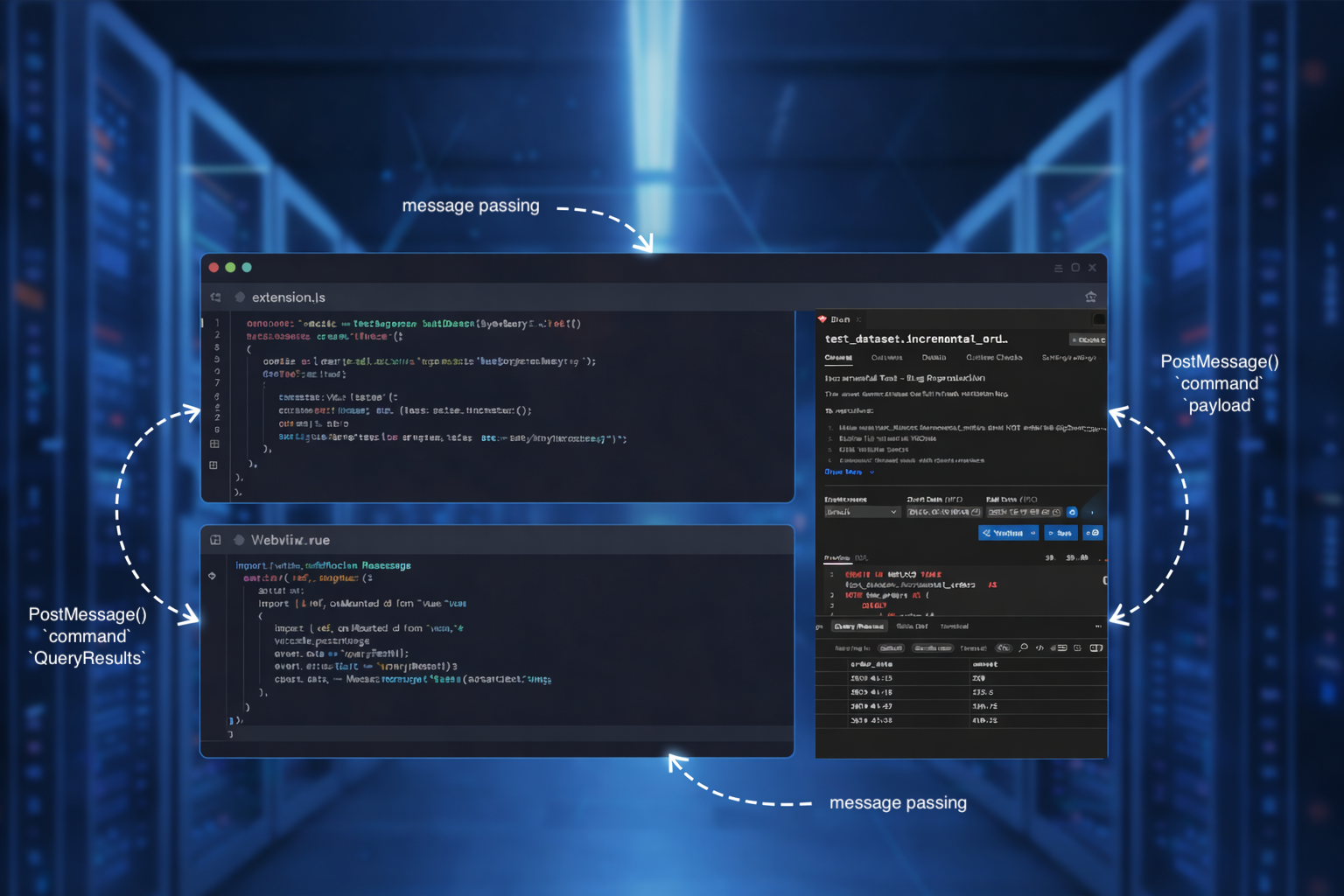

Bruin, by comparison, offers:

- ✅ VS Code extension with visual lineage, docs, and execution

- ✅ Powerful CLI with validation, dry-run, and backfills

- ✅ Single binary installation - No dependencies, no virtual environments

- ✅ Built-in lineage visualization - Even locally

- ✅ Fast feedback loop - Instant validation

- ✅ Simpler syntax - Less boilerplate, easier to learn

The VS Code extension is a game-changer. You can:

- Visualize your entire pipeline graph

- See column-level lineage

- Execute individual assets or entire pipelines

- View documentation inline

- Validate configurations in real-time

```text
┌─────────────────┐
│ Ingestion Tool  │ (Fivetran/Airbyte)
└────────┬────────┘
         │
┌────────▼────────┐
│       dbt       │ (Transformations only)
└────────┬────────┘
         │
┌────────▼────────┐
│  Orchestrator   │ (Airflow/Dagster)
└────────┬────────┘
         │
┌────────▼────────┐
│  Observability  │ (Monte Carlo/dbt Cloud)
└────────┬────────┘
         │
┌────────▼────────┐
│ Lineage/Catalog │ (Separate tool or dbt Cloud)
└─────────────────┘
```

Result: 3-5 tools to manage, configure, integrate, and maintain. Different authentication systems. Multiple points of failure. Complex deployment pipelines.

```text
┌─────────────────────────────┐
│          Bruin CLI          │
│                             │
│ • Ingestion (100+ sources)  │
│ • SQL Transformations       │
│ • Python Execution          │
│ • Quality Checks            │
│ • Orchestration             │
│ • Lineage                   │
│ • Observability             │
└─────────────────────────────┘
```

Result: Single tool. One configuration format. One CLI. One deployment. Everything works together out of the box.

To be fair, there are scenarios where dbt makes sense:

- ✅ You only need transformations - Already have ingestion and orchestration figured out with other tools

- ✅ Your team is SQL-only - No Python requirements whatsoever

- ✅ You want dbt Cloud - Willing to pay for the managed experience

- ✅ You're heavily invested - Already have hundreds of dbt models and migration would be significant effort

Choose Bruin if you want:

- ✅ End-to-end solution - Ingestion, transformation, and quality in one tool

- ✅ SQL + Python - Building ML models, complex analytics, or need flexibility

- ✅ To move fast - Focus on business logic, not infrastructure

- ✅ Better performance - 3x faster pipeline execution, as reported by Buluttan after migrating from dbt

- ✅ Starting fresh or scaling - Building new pipelines or dbt is getting slow

- ✅ Simpler operations - Single binary, no Airflow, minimal complexity

Worried about switching? The migration path is straightforward:

- Jinja support - Your SQL transforms work with minimal changes

- Clear dependency syntax - Asset dependencies are explicit and easy to understand

- Incremental models - Same concept, same patterns

- Better performance - Buluttan reports 3x faster pipeline execution after migrating

Plus, you gain capabilities:

- Native Python support

- Built-in data ingestion

- No more Airflow maintenance

- Integrated quality checks

- Built-in lineage

The modern data stack promised modularity—pick the best tool for each job. In practice, it delivered complexity.

dbt is excellent at what it does, but what it does is limited: SQL transformations. Everything else requires additional tools, infrastructure, and integration work.

Bruin delivers the full pipeline: ingestion, transformation (SQL and Python), quality checks, and orchestration—all in a single, fast, unified tool.

If you're starting a new data project, or if your dbt stack is getting unwieldy, give Bruin a try. It's:

- Open source (MIT licensed)

- Free to use

- Production-ready

- Fast (written in Go)

- Simple (single binary, no dependencies)

The data pipelines of the future won't be held together by duct tape and five different tools. They'll be unified, fast, and simple.

Ready to try Bruin?

Install the CLI:

```bash
curl -LsSf https://getbruin.com/install/cli | sh
```

Try the quickstart:

```bash
bruin init my-pipeline
cd my-pipeline
bruin run
```


Did you migrate from dbt to Bruin? We'd love to hear your story. Reach out on Slack or GitHub.