Bruin Agent: How I Survived (and Thrived) in the Zombie Apocalypse

Gather up, everyone. The bonfire's warm, but what I’m about to tell you will freeze you anyway: the night I went toe-to-toe with zombies and somehow came out on top.

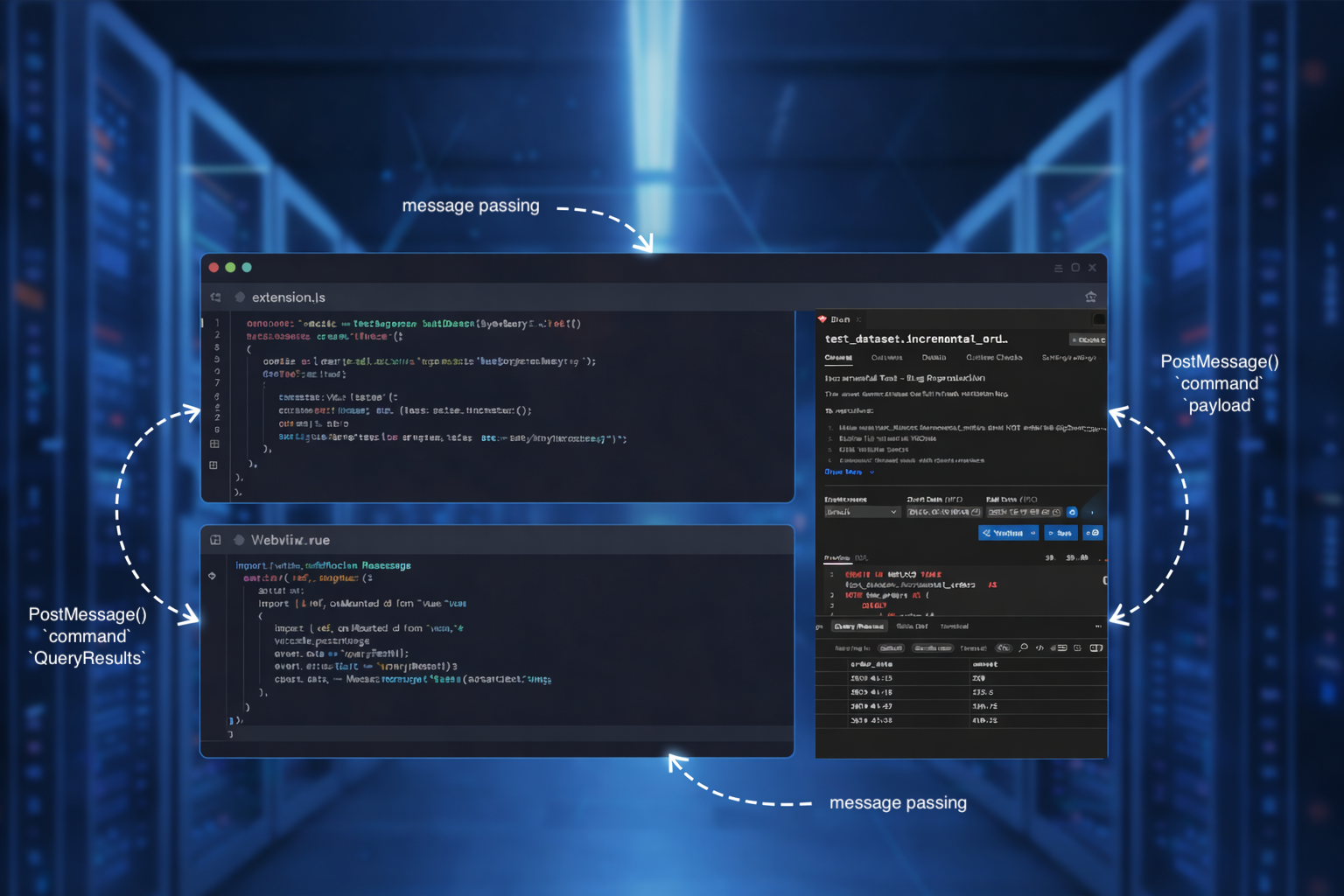

Bruin is an open-source data platform that brings together data ingestion, transformation, quality, and governance. We run different types of workloads for our customers, allowing them to extract insights from the data without having to deal with the boring infrastructure problems.

Our customers usually write SQL and Python definitions, each of which we call an "asset", and we build a pipeline around these assets. When it is time to execute these workloads, we take care of provisioning the infrastructure, running the workloads, and gathering observability data for our customers.

Traditionally, these workloads have been completely executed within Bruin-owned cloud environments. We provision multi-tenant environments across different cloud providers and regions, and we distribute the workloads accordingly. The infrastructure is owned and maintained by us, and from our clients' perspective, it is a fully serverless experience.

While this has worked great so far, there has always been a need to run these workloads on our clients' own infrastructure. For regulatory reasons, because of special networking and infrastructure requirements, or purely for peace of mind, our clients might prefer running all of their workloads in their own infrastructure. To meet these needs, we have been working on a distributed execution topology where Bruin Cloud hosts the control plane and our customers can host the data plane. We call the control plane "Orchestrator", henceforth “oXr”, and the individual runners "Bruin Agent".

Building these individual pieces has been an interesting journey, plagued with challenges such as authentication, error handling, keeping task statuses in sync between scheduling → oXr → Agent, and reporting task results, all in a multi-tenant environment.

At some point, we noticed that certain failed task attempts came with no logs.

Logs are supposed to be produced by Agent and sent back to oXr for collection, but these tasks had none. We dug into the “history” of these tasks. oXr logged that they were picked up, but logs were never sent, and the tasks were never heard from again, leading oXr to mark them as… ZOMBIE TASKS. These are a special type of failure where oXr loses contact with the Agent running the task: no heartbeats, no logs, nothing.
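To make the idea of a zombie task concrete, here is a minimal sketch of heartbeat-based detection. It is not how oXr is actually implemented: the `Task` type, the `findZombies` function, and the one-minute threshold are all hypothetical and exist only for illustration.

```go
package main

import (
	"fmt"
	"time"
)

// Task is a hypothetical, simplified view of a running task
// as a control plane might track it.
type Task struct {
	ID            string
	LastHeartbeat time.Time
}

// findZombies returns the IDs of tasks whose last heartbeat is
// older than timeout, measured against now.
func findZombies(tasks []Task, now time.Time, timeout time.Duration) []string {
	var zombies []string
	for _, t := range tasks {
		if now.Sub(t.LastHeartbeat) > timeout {
			zombies = append(zombies, t.ID)
		}
	}
	return zombies
}

// sampleZombies runs findZombies over fixed data so the result is deterministic.
func sampleZombies() []string {
	now := time.Date(2024, 1, 1, 12, 0, 0, 0, time.UTC)
	tasks := []Task{
		{ID: "healthy", LastHeartbeat: now.Add(-10 * time.Second)},
		{ID: "silent", LastHeartbeat: now.Add(-5 * time.Minute)},
	}
	// Assumed threshold: one minute without a heartbeat.
	return findZombies(tasks, now, time.Minute)
}

func main() {
	fmt.Println(sampleZombies()) // prints [silent]
}
```

Only the task that has been quiet for longer than the threshold is flagged. A task that was never really picked up by an Agent would never send a heartbeat and would end up in the same bucket.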

This was very strange. Looking at the logs, it seemed that while oXr reported the task as picked up, Agent timed out. Since these are different log streams, it was hard to confirm. To verify, we made Agent generate and log a random request ID and pass it using the standard HTTP header X-Request-Id. We also logged this on the oXr side so we could match them and see the complete request lifecycle.
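The correlation trick can be sketched in a few lines of Go. This is an illustration rather than our production code: `newRequestID` and `roundTrip` are hypothetical names, and the server here is a local test server standing in for oXr.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// newRequestID returns 16 random bytes, hex encoded.
func newRequestID() string {
	b := make([]byte, 16)
	if _, err := rand.Read(b); err != nil {
		panic(err)
	}
	return hex.EncodeToString(b)
}

// roundTrip sends one request carrying X-Request-Id and returns both the ID
// the client sent and the ID the server observed, so the two can be matched.
func roundTrip() (string, string, error) {
	seenCh := make(chan string, 1)
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// Server side: read the ID (a real server would log it).
		seenCh <- r.Header.Get("X-Request-Id")
		w.WriteHeader(http.StatusNoContent)
	}))
	defer srv.Close()

	// Client side: generate the ID and attach it to the request.
	sent := newRequestID()
	req, err := http.NewRequest(http.MethodGet, srv.URL, nil)
	if err != nil {
		return "", "", err
	}
	req.Header.Set("X-Request-Id", sent)

	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return "", "", err
	}
	resp.Body.Close()
	return sent, <-seenCh, nil
}

func main() {
	sent, seen, err := roundTrip()
	fmt.Println(sent == seen, err)
}
```

With the same ID in both log streams, a single search shows what each side believed happened to one specific request.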

Our fears were confirmed. We saw the same request timing out on the client (Agent) while resulting in a task delivery on the server (oXr). How could this be?

With HTTP as the protocol, this is always a possibility, but it should be astronomically rare. Instead, it was happening several times an hour, and the logs showed it had been happening for weeks! Head, meet scratcher.

We played with timeouts on both ends. It turned out the timeouts were not being honored by Agent. We also ensured the Server’s timeout was shorter than Agent’s, so that Agent never gives up empty-handed while the Server thinks it has delivered a task. After these attempts, the frequency fluctuated but never really went away.
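The timeout ordering can be illustrated with a small sketch. The handler, the durations, and the `pollOnce` helper are assumptions made up for this example; they only show the shape of the idea: the server gives up waiting for a task before the client gives up waiting for the server.

```go
package main

import (
	"context"
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// Illustrative values only: the server waits less than the agent does.
const (
	serverWait   = 50 * time.Millisecond
	agentTimeout = 2 * time.Second
)

// pollOnce starts a test server that waits up to wait for a task, then polls
// it once with a client whose overall timeout is timeout.
func pollOnce(wait, timeout time.Duration) (int, error) {
	tasks := make(chan string) // no task ever arrives in this demo
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// Derive the wait from the request context so it also ends
		// when the client disconnects.
		ctx, cancel := context.WithTimeout(r.Context(), wait)
		defer cancel()
		select {
		case id := <-tasks:
			fmt.Fprint(w, id)
		case <-ctx.Done():
			w.WriteHeader(http.StatusNoContent) // nothing to deliver
		}
	}))
	defer srv.Close()

	client := &http.Client{Timeout: timeout}
	resp, err := client.Get(srv.URL)
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	return resp.StatusCode, nil
}

func main() {
	code, err := pollOnce(serverWait, agentTimeout)
	fmt.Println(code, err) // the server answers first: 204 <nil>
}
```

Swapping the two durations makes the client time out before the server has answered, which is exactly the window in which the two sides can disagree about whether a task was delivered.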

After more digging, we realized that for timeout purposes, we weren’t using the correct Go context, namely, the HTTP request context. Instead, the oXr code used the general app context.

What happened was: the request timed out, but the code that marks the task as running wasn’t receiving the context cancellation because it was using the wrong context. Such a silly mistake.

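```go
// Before
func (t *TaskDeliverer) DeliverTask(ctx *gin.Context) {
	// ...
	// Bug: requestContext here came from the general app context,
	// not from the HTTP request, so it was never cancelled on timeout.
	task, err = t.Queue.GetTaskAndMarkAsRunning(requestContext)
	// ...
}

// After
func (t *TaskDeliverer) DeliverTask(ctx *gin.Context) {
	// ...
	// The context of the HTTP request is cancelled when the request ends.
	task, err = t.Queue.GetTaskAndMarkAsRunning(ctx.Request.Context())
	// ...
}
```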
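The effect of picking the wrong context can be reproduced without any HTTP at all. In the sketch below, `claimTask` is a hypothetical stand-in for a slow "get task and mark as running" call, and the durations are arbitrary; the only point is that a cancellation is invisible to code that was handed a different context.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// claimTask simulates a slow lookup that marks a task as running.
// It reports true only if ctx is still alive when the lookup finishes.
func claimTask(ctx context.Context, lookup time.Duration) (bool, error) {
	select {
	case <-time.After(lookup):
		return true, nil // task marked as running
	case <-ctx.Done():
		return false, ctx.Err() // caller is gone, do not claim the task
	}
}

// simulate models a request that times out after 20ms while the lookup
// needs 100ms, using either the request context or the app-wide context.
func simulate(useRequestCtx bool) (bool, error) {
	appCtx := context.Background()
	reqCtx, cancel := context.WithTimeout(appCtx, 20*time.Millisecond)
	defer cancel()

	ctx := appCtx
	if useRequestCtx {
		ctx = reqCtx
	}
	return claimTask(ctx, 100*time.Millisecond)
}

func main() {
	marked, err := simulate(false)
	fmt.Println("app context:", marked, err)

	marked, err = simulate(true)
	fmt.Println("request context:", marked, errors.Is(err, context.DeadlineExceeded))
}
```

With the app-wide context the task is claimed even though nobody is waiting for the answer, which is how a zombie is born. With the request context the claim is abandoned as soon as the request's deadline passes.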

We corrected this, and the tasks with zero logs disappeared! We thought we had cracked it...

We confirmed that no tasks with zero logs existed anymore (the original problem). But something was off...

Suddenly, lots of different zombie tasks started appearing. These weren’t like the others: they were correctly picked up and executed to completion, but they were never reported as successful.

We knew where to look this time. Agent makes a call to report the result back to oXr. In the code for that call, we found that on error, it failed silently. Fixing that was the first action we took:

```go
// Before
_ = w.client.ReportTaskAsSucceeded(ctx, task)

// After
err := w.client.ReportTaskAsSucceeded(ctx, task)
if err != nil {
	slog.Error("Failed to report task state", "error", err)
}
```

After adding logging, we discovered the request was timing out. Strange, since handling that request only does one database lookup and updates a status column.

As you may have guessed, after a bit of digging we found that the column we filter on in that lookup didn’t have an index. With a table as large as tasks, the query had started to time out. We added the missing index. We already had some metrics on zombie tasks; after all this, we also put an alert on them.

There's a lot we learned from this thriller:

- Always log errors. Even if there’s nothing you can do about them, at least you know they’re happening.

- Get certainty about the problem before jumping to a solution.

- Don’t miss indexes on database columns you use to SELECT.

- Try to have preemptive alerts so you spot the problem early.

- Go contexts can be tricky: think carefully about how you use them instead of passing them mindlessly through function calls.