Fivetran vs Bruin: Beyond Data Ingestion

When evaluating data ingestion tools, the comparison often stops at connector counts and pricing models. But there's a more fundamental question that many teams overlook: should your data ingestion tool only handle ingestion?

Fivetran has established itself as a leader in managed data ingestion, offering 700+ pre-built connectors that automatically sync data from various sources to your warehouse. It's a solid choice if you only need the "E" and "L" in ELT. But here's the catch: Fivetran is just one piece of your data stack.

With Fivetran, you're getting excellent ingestion capabilities, but you still need:

- A transformation tool (dbt, Dataform, or custom SQL scripts) - separate subscription required

- An orchestration platform (Airflow, Dagster, or Prefect) - infrastructure and engineering time required

- Data quality tooling (Great Expectations, Monte Carlo, or Soda) - separate service or infrastructure

This means managing 3-5 different tools, each with its own configuration format, maintenance requirements, and integration challenges. Your team spends more time stitching together a Frankenstein stack than actually building data pipelines that deliver value.

The result? A complex ecosystem where:

- Each tool requires separate documentation and expertise

- Integration points become fragile and hard to debug

- Costs compound across multiple vendors

- Context-switching slows down development

- Onboarding new team members takes weeks instead of days

Bruin takes a fundamentally different approach: why not handle the entire pipeline in a single, unified framework?

Bruin is an open-source data pipeline tool that brings together everything you need for modern data work:

- Data Ingestion - 100+ connectors via ingestr, built-in and open-source

- SQL & Python Transformations - native support for both languages in the same pipeline

- Built-in Orchestration - native DAG execution and scheduling without external dependencies

- Data Quality Checks - native quality checks on all assets with automatic failure handling

Everything works together seamlessly. One tool to learn, one CLI, one configuration format. No more context-switching between different tools, no more trying to keep separate systems in sync, no more integration nightmares.

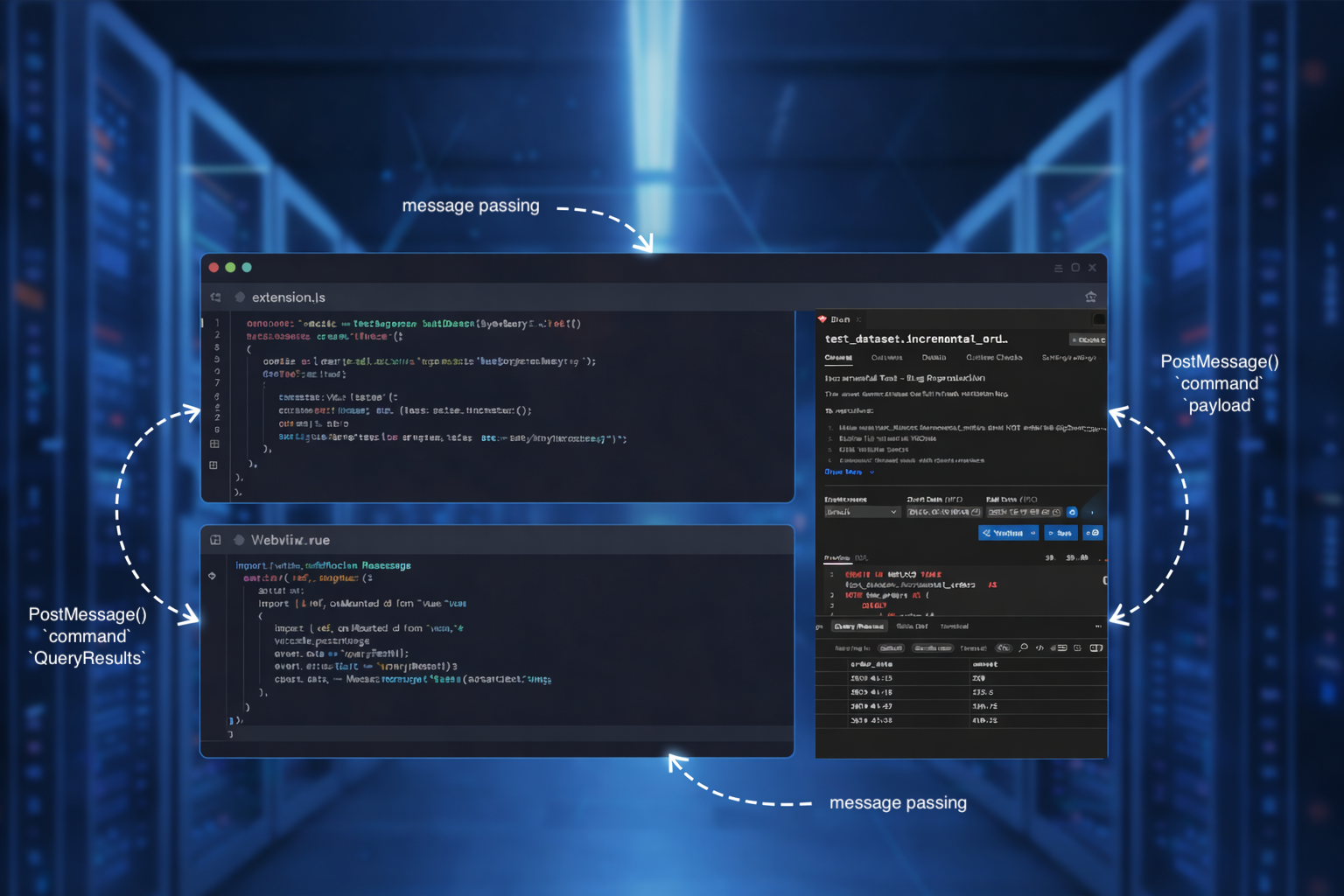

Here's what a complete pipeline looks like in Bruin:

    # Ingest data from PostgreSQL
    name: raw.users
    type: ingestr
    parameters:
      source_connection: postgresql
      source_table: 'public.users'
      destination: bigquery

    ---

    # Transform with SQL
    name: analytics.active_users
    type: bq.sql

    SELECT
        user_id,
        email,
        last_login,
        account_status
    FROM raw.users
    WHERE account_status = 'active'

    @quality
    # Built-in quality checks
    row_count > 0
    not_null: [user_id, email]

Everything in one place, one syntax, one tool. The transform depends on the ingested table, the quality checks run automatically, and Bruin orchestrates it all.
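To make the quality-check idea concrete, here is a minimal sketch in plain Python of what checks like `row_count > 0` and `not_null` boil down to. It is an illustration only, not Bruin's implementation: the function name `run_quality_checks` and the sample rows are hypothetical, and rows are modeled as plain dictionaries to keep the sketch dependency-free.

```python
def run_quality_checks(rows, not_null_columns):
    """Return a list of failure messages; an empty list means every check passed."""
    failures = []

    # Equivalent of `row_count > 0`: the asset must not be empty.
    if len(rows) == 0:
        failures.append("row_count: expected at least one row")

    # Equivalent of `not_null`: no row may have a missing value in these columns.
    for column in not_null_columns:
        missing = sum(1 for row in rows if row.get(column) is None)
        if missing:
            failures.append(f"not_null: {column} has {missing} null value(s)")

    return failures


# Hypothetical sample data: the second row is missing an email.
sample_rows = [
    {"user_id": 1, "email": "a@example.com"},
    {"user_id": 2, "email": None},
]
print(run_quality_checks(sample_rows, ["user_id", "email"]))
```

A pipeline runner would typically stop downstream assets whenever the returned list is non-empty, which is the "automatic failure handling" idea in miniature.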

Here's where Bruin really shines: what happens when Fivetran doesn't have the connector you need?

With Fivetran's 700+ connectors, you're limited to what they support. If your data source isn't in their catalog—whether it's an internal API, a legacy system, or a niche SaaS tool—you're stuck. Your options are:

- Wait for Fivetran to build it (which may never happen)

- Request a connector and hope it aligns with their roadmap

- Build a Function connector (complex and limited)

- Give up and use a separate tool for that one source

None of these options is ideal. And this is exactly where teams hit the wall with Fivetran.

Bruin's Python materialization lets you ingest data from any source you can reach with Python code. No waiting, no restrictions, no workarounds.

Here's a real example of ingesting from a custom internal API:

    """@bruin
    name: raw.custom_api_data
    image: python:3.13
    connection: bigquery

    materialization:
      type: table
      strategy: merge

    columns:
      - name: id
        primary_key: true
      - name: created_at
        type: timestamp
      - name: status
        type: string
    @bruin"""

    import pandas as pd
    import requests

    def materialize(**kwargs):
        # Call your custom API with authentication
        headers = {'Authorization': f'Bearer {kwargs["secrets"]["api_token"]}'}
        response = requests.get(
            'https://internal-api.company.com/data',
            headers=headers,
            params={'since': kwargs.get('last_run', '2024-01-01')},
            timeout=30,
        )
        # Fail the run on HTTP errors instead of loading an error payload
        response.raise_for_status()
        data = response.json()

        # Transform to DataFrame with any business logic
        df = pd.DataFrame(data['items'])

        # Apply custom transformations
        df['created_at'] = pd.to_datetime(df['created_at'])
        df['normalized_status'] = df['status'].str.lower()

        # Bruin automatically materializes this to BigQuery
        # using the merge strategy defined above
        return df

That's it. Bruin handles all the heavy lifting:

- Dependency management with uv - install any Python package you need

- Efficient data transfer with Apache Arrow - optimized for large datasets

- Automatic loading to your warehouse using ingestr

- Incremental strategies - merge, append, replace, delete+insert

- Secret management - secure access to credentials

- Error handling - automatic retries and failure notifications
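If the incremental strategies are new to you, here is a rough sketch of what `merge` means conceptually: incoming rows overwrite existing rows with the same primary key, and rows with new keys are added. This is plain Python for illustration, not how Bruin or your warehouse implements it (a warehouse would usually run a `MERGE` statement); the function name `merge_rows` and the sample data are hypothetical.

```python
def merge_rows(existing, incoming, primary_key):
    """Upsert `incoming` into `existing`, matching rows on `primary_key`."""
    # Index the current table contents by primary key.
    merged = {row[primary_key]: dict(row) for row in existing}

    for row in incoming:
        # A matching key replaces the old row; an unseen key adds a new row.
        merged[row[primary_key]] = dict(row)

    return list(merged.values())


# Hypothetical data: id 2 is updated, id 3 is new, id 1 is untouched.
existing = [{"id": 1, "status": "pending"}, {"id": 2, "status": "active"}]
incoming = [{"id": 2, "status": "closed"}, {"id": 3, "status": "active"}]
result = merge_rows(existing, incoming, "id")
print(result)
```

By contrast, `append` would keep both versions of id 2, and `replace` would discard the existing rows entirely, which is why `merge` needs a primary key declared on the asset.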

This opens up unlimited possibilities for data ingestion:

Ingest from internal microservices, REST APIs, or GraphQL endpoints that Fivetran doesn't support. Most companies have dozens of internal services that hold critical business data—now you can bring it all into your warehouse.

    # Example: GraphQL API
    import requests
    import pandas as pd

    def materialize(**kwargs):
        query = """
        query {
            orders(limit: 1000) {
                id
                customer_id
                total_amount
                created_at
            }
        }
        """

        response = requests.post(
            'https://api.internal.com/graphql',
            json={'query': query},
            timeout=30,
        )
        # Surface HTTP-level failures before parsing the body
        response.raise_for_status()

        return pd.DataFrame(response.json()['data']['orders'])
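One thing worth handling in a GraphQL connector: many GraphQL servers report query failures in an `errors` field of the response body while still returning HTTP 200, so indexing straight into `data` can hide the real problem. Below is a small sketch of a guard you might put in front of the DataFrame conversion. The helper name `extract_orders` is hypothetical, and the `orders` field simply mirrors the query above.

```python
def extract_orders(payload):
    """Return the `orders` list from a GraphQL response body, or raise on errors."""
    errors = payload.get("errors")
    if errors:
        # Collect every server-side message so the failure is easy to debug.
        messages = "; ".join(e.get("message", "unknown error") for e in errors)
        raise RuntimeError(f"GraphQL request failed: {messages}")

    # `data` can be null when the whole query fails, so fall back to an empty dict.
    data = payload.get("data") or {}
    return data.get("orders", [])


ok_payload = {"data": {"orders": [{"id": 1, "total_amount": 42.0}]}}
print(extract_orders(ok_payload))
```

Inside `materialize`, the idea would be to call something like `extract_orders(response.json())` and pass the result to `pd.DataFrame`.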

Extract data from mainframes, FTP servers, or proprietary databases with custom connection logic. Many enterprises have decades-old systems that still hold valuable data.

    # Example: FTP file ingestion
    import pandas as pd
    from ftplib import FTP

    def materialize(**kwargs):
        # The context manager closes the connection even if the download fails
        with FTP('ftp.legacy-system.com') as ftp:
            ftp.login(user='username', passwd=kwargs['secrets']['ftp_password'])

            # Download CSV file
            with open('local_file.csv', 'wb') as f:
                ftp.retrbinary('RETR /data/export.csv', f.write)

        return pd.read_csv('local_file.csv')

Scrape websites or parse HTML/XML data sources that don't have APIs. Sometimes the data you need is only available on web pages.

    # Example: Web scraping with BeautifulSoup
    import pandas as pd
    from bs4 import BeautifulSoup
    import requests

    def materialize(**kwargs):
        response = requests.get('https://example.com/data-page', timeout=30)
        response.raise_for_status()
        soup = BeautifulSoup(response.content, 'html.parser')

        # Extract data from HTML tables
        table = soup.find('table', {'class': 'data-table'})
        rows = []
        for row in table.find_all('tr')[1:]:  # skip the header row
            cols = [col.text.strip() for col in row.find_all('td')]
            rows.append(cols)

        return pd.DataFrame(rows, columns=['id', 'name', 'value'])

Apply complex business logic during ingestion—data enrichment, lookups, aggregation, or any transformation before loading.

    # Example: Enriching data during ingestion
    import pandas as pd
    import requests

    def materialize(**kwargs):
        # Get raw data
        raw_data = requests.get('https://api.example.com/transactions', timeout=30).json()
        df = pd.DataFrame(raw_data)

        # Enrich with external data
        for idx, row in df.iterrows():
            geo_data = requests.get(
                'https://geocode.api.com',
                params={'address': row['address']},  # lets requests URL-encode the address
                timeout=30,
            ).json()
            df.at[idx, 'latitude'] = geo_data['lat']
            df.at[idx, 'longitude'] = geo_data['lng']

        return df
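A practical note on enrichment: calling an external API once per row gets slow quickly when many rows share the same lookup key. A common remedy is to look up each distinct value once and reuse the result. The sketch below shows the idea in plain Python; `enrich_with_geodata` and `fake_geocode` are hypothetical names, and the stub stands in for whatever geocoding call you actually use.

```python
def enrich_with_geodata(rows, geocode):
    """Add latitude/longitude to each row, calling `geocode` once per distinct address."""
    cache = {}
    enriched = []
    for row in rows:
        address = row["address"]
        if address not in cache:
            # Only the first occurrence of an address triggers a lookup.
            cache[address] = geocode(address)
        geo = cache[address]
        enriched.append({**row, "latitude": geo["lat"], "longitude": geo["lng"]})
    return enriched


calls = []

def fake_geocode(address):
    """Stand-in for a real geocoding request; records each call and returns dummy coordinates."""
    calls.append(address)
    return {"lat": 52.52, "lng": 13.40}


rows = [
    {"id": 1, "address": "Main St 1"},
    {"id": 2, "address": "Main St 1"},
    {"id": 3, "address": "Side Rd 9"},
]
enriched = enrich_with_geodata(rows, fake_geocode)
print(len(calls))  # number of lookups actually made
```

With a DataFrame, the same idea applies: build the lookup dictionary from the distinct addresses, then use `Series.map` to fill the new columns.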

You have full control over the extraction logic and can use any Python library: Pandas, Polars, requests, BeautifulSoup, Selenium, or any other tool in the Python ecosystem. This is a game-changer for teams dealing with custom data sources.

Fivetran is cloud-only. Your data must flow through their infrastructure, and you're locked into their platform. No on-premises deployment, no air-gapped environments, no choice. This is a non-starter for many organizations with:

- Strict data sovereignty requirements

- Compliance regulations that prevent data from leaving their network

- Security policies requiring on-premises processing

- Cost constraints around data egress

Bruin runs wherever you want:

- Local development on your laptop - iterate and test without any cloud dependency

- GitHub Actions for CI/CD - run pipelines on every commit

- AWS EC2 / Azure VM / GCP Compute - deploy in your existing cloud infrastructure

- Kubernetes - containerized deployment with auto-scaling

- On-premises / air-gapped - keep sensitive data within your network

- Bruin Cloud - fully managed option if you want zero maintenance

You control where your data lives and how it's processed. No vendor lock-in. No forced cloud deployments. No compromises on security and compliance.

Fivetran is a black box. You can't see how their connectors work, you can't modify them to fit your needs, and you're entirely dependent on their roadmap for new features. If something breaks, you're at the mercy of their support team.

Bruin is fully open-source:

- Full code visibility - see exactly how everything works

- Fork and modify - customize connectors for your specific needs

- Build custom connectors - contribute back to the community

- Community-driven - features are built based on real user needs

- No vendor lock-in - you own your pipelines and can run them anywhere

- Security audits - verify the code yourself, no blind trust required

When you're dealing with critical business data, transparency matters. With open source, you're never blocked by a vendor's timeline or priorities.

With Fivetran, costs accumulate across the stack:

- Volume-based pricing - pay per million rows synced, costs scale with data

- Connector tier pricing - premium connectors cost more

- Additional tools required:

  - dbt Cloud: $50-100/developer/month

  - Airflow/Dagster: $500-2000/month in infrastructure

  - Data quality tools: $500-2000/month

Total for a mid-sized team: $3,000-8,000/month for just the tooling, not including data warehouse costs.
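To see how the add-on tools alone contribute to that total, here is a quick back-of-the-envelope calculation using the price ranges listed above. The team size of five developers is an assumption for illustration, the function name `addon_tooling_range` is hypothetical, and Fivetran's own volume-based fee is deliberately left out because it depends on your data.

```python
def addon_tooling_range(developers):
    """Return the (low, high) monthly cost in USD of the add-on tools, excluding Fivetran itself."""
    dbt_cloud = (50 * developers, 100 * developers)  # $50-100 per developer
    orchestration = (500, 2000)                      # Airflow/Dagster infrastructure
    data_quality = (500, 2000)                       # data quality tooling

    low = dbt_cloud[0] + orchestration[0] + data_quality[0]
    high = dbt_cloud[1] + orchestration[1] + data_quality[1]
    return low, high


# Assumed team size: 5 developers.
print(addon_tooling_range(5))  # (1250, 4500)
```

The Fivetran subscription sits on top of this range, which is how the monthly total climbs toward the figures quoted above.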

With Bruin:

- Self-hosted: $0 - completely free, run on your infrastructure

- Bruin Cloud (optional): Predictable pricing without per-row charges

- All-in-one: No additional tools needed

Total for a mid-sized team: $0 for self-hosted, or predictable monthly cost for Bruin Cloud with no surprise bills.

| Feature | Fivetran | Bruin |
|---|---|---|
| Data Ingestion | Yes (700+ connectors) | Yes (100+ connectors) |
| Custom Connectors | Limited | Unlimited with Python |
| Open Source | No (Proprietary) | Yes (Fully open source) |
| SQL Transformations | No (Requires dbt) | Yes (Built-in) |
| Python Support | No | Yes (Full support) |
| Data Quality Checks | No (Requires separate tool) | Yes (Built-in) |
| Orchestration | No (Requires Airflow/Dagster) | Yes (Built-in) |
| Deployment Options | Cloud-only | Anywhere |
| Customization | Limited | Full control |
| Pricing Model | Volume-based | Free or flat rate |

Choose Fivetran if:

- You only need basic data ingestion (not transformation, quality, or orchestration)

- You're okay with cloud-only deployment with no exceptions

- You prefer fully managed services and have the budget for it ($3k-8k+/month)

- All your data sources are in Fivetran's 700+ connector catalog

- You're willing to invest in additional tools (dbt, Airflow, quality monitoring)

- You're comfortable with volume-based pricing that can spike unexpectedly

- Vendor lock-in isn't a concern for your organization

Choose Bruin if:

- You want end-to-end pipelines (ingestion + transformation + quality) in one unified tool

- You need to ingest from custom sources not supported by Fivetran

- You value deployment flexibility and want to avoid vendor lock-in

- You're tired of managing Fivetran + dbt + Airflow + quality tools separately

- You want transparency and open-source code you can inspect and modify

- You need predictable costs without surprise bills based on data volume

- You want full control over your data pipelines and infrastructure

- You need Python support for complex transformations and custom logic

- You're looking to simplify your stack and reduce operational complexity

If you love the idea of Bruin's end-to-end approach but want a fully managed platform, Bruin Cloud offers the best of both worlds.

Bruin Cloud includes:

- Managed ingestion from 100+ sources

- Managed transformations (SQL & Python)

- Built-in quality checks and validation

- Automated orchestration and scheduling

- Monitoring and alerting

- Zero infrastructure management

Unlike Fivetran, Bruin Cloud gives you complete pipelines, not just ingestion. And unlike self-hosting, you get zero maintenance. The choice is yours: self-host for free, or use Bruin Cloud for a fully managed experience.

Let me show you what a real-world pipeline looks like with Bruin vs. the Fivetran stack:

Tools needed: Fivetran + dbt + Airflow + Monte Carlo

- Fivetran: Configure connector in UI to ingest from PostgreSQL

- dbt: Write SQL models for transformations

- Airflow: Write DAGs to orchestrate dbt runs after Fivetran syncs

- Monte Carlo: Set up data quality monitors

- Glue code: Build custom integrations between all tools

Result: 4 separate tools, 4 configurations, 4 places to debug when things break.

Tools needed: Just Bruin

    # pipeline.yml - Everything in one place

    # 1. Ingest from PostgreSQL
    name: raw.orders
    type: ingestr
    parameters:
      source_connection: postgresql
      source_table: 'public.orders'
      destination: bigquery

    ---

    # 2. Transform with SQL
    name: analytics.daily_revenue
    type: bq.sql
    depends:
      - raw.orders

    SELECT
        DATE(order_date) as date,
        COUNT(*) as order_count,
        SUM(total_amount) as revenue
    FROM raw.orders
    WHERE status = 'completed'
    GROUP BY date

    @quality
    # 3. Quality checks built-in
    row_count > 0
    revenue >= 0
    not_null: [date, order_count, revenue]

    ---

    # 4. Custom enrichment with Python
    name: analytics.enriched_orders
    type: python
    depends:
      - raw.orders

    """@bruin
    connection: bigquery
    materialization:
      type: table
    @bruin"""

    import pandas as pd
    import requests

    def materialize(context):
        # Read from BigQuery
        df = context.read_sql("SELECT * FROM raw.orders")

        # Enrich with external API
        for idx, row in df.iterrows():
            customer_data = requests.get(
                f'https://api.crm.com/customers/{row.customer_id}'
            ).json()
            df.at[idx, 'customer_segment'] = customer_data['segment']

        return df

Result: One tool, one config, one place to look. Everything orchestrated automatically based on dependencies.
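If you're wondering what "orchestrated automatically based on dependencies" means in practice, the core idea is a topological sort: each asset runs only after everything it depends on has finished. Here is a minimal sketch using Python's standard-library `graphlib`, with the asset names from the pipeline above. It illustrates the concept only and says nothing about how Bruin schedules work internally.

```python
from graphlib import TopologicalSorter

# Map each asset to the set of assets it depends on (mirrors the `depends` blocks above).
dependencies = {
    "raw.orders": set(),
    "analytics.daily_revenue": {"raw.orders"},
    "analytics.enriched_orders": {"raw.orders"},
}

# static_order() yields every asset after all of its dependencies.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)
```

The ingestion asset always comes first. The two downstream assets have no dependency on each other, so a scheduler is free to run them in either order or in parallel.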

Ready to simplify your data stack? Here's how to get started:

    # Install Bruin CLI
    pip install bruin

    # Initialize a new project
    bruin init my-pipeline

    # Run your pipeline
    bruin run

That's it. No complex setup, no multiple tools to configure, no integration headaches.


The future of data pipelines isn't about having the most connectors or the fanciest UI. It's about simplicity, transparency, and flexibility.

Fivetran pioneered managed data ingestion, but the world has moved beyond point solutions. Modern data teams need:

- End-to-end capabilities in a unified platform

- Custom connector flexibility for unique data sources

- Deployment freedom to run anywhere

- Open-source transparency for security and trust

- Predictable costs without volume-based surprises

That's exactly what Bruin delivers—an open-source, end-to-end data pipeline tool that handles ingestion, transformation, quality, and orchestration in one elegant package. With Python custom connectors, you're never limited by a vendor's roadmap.

The choice is yours: continue managing a complex stack of disparate tools, or simplify with Bruin's unified approach. Your data team will thank you.