The Hidden Costs of DIY Data Pipelines

I've talked to hundreds of data teams over the past few years, and there's a pattern I see over and over again. It starts with a simple requirement: "We need to move data from our production database to our warehouse." The initial estimate? A couple of weeks of engineering time, maybe $10,000 in total cost.

Fast forward 18 months, and that "simple" pipeline has consumed hundreds of thousands of dollars, multiple engineers are maintaining it, and the business still doesn't trust the data.

Let me show you where all that money goes.

Every DIY pipeline story starts the same way. An engineer looks at the problem and thinks: "This is just moving data from A to B. How complex can it be?"

The first version is genuinely simple:

```python
import pandas as pd
from sqlalchemy import create_engine

# Read from source
source = create_engine('postgresql://source_db')
data = pd.read_sql('SELECT * FROM orders', source)

# Write to destination
destination = create_engine('bigquery://project/dataset')
data.to_sql('orders', destination, if_exists='replace')
```

Under twenty lines of code. One afternoon of work. Problem solved, right?

This is where the trap springs. That simple script works perfectly for the first few weeks. The team celebrates the quick win. Management is happy about the cost savings compared to buying a tool.

But data has this nasty habit of growing. And changing. And breaking in ways you never anticipated.

The first cracks appear quickly:

- The script starts failing when the orders table grows beyond what fits in memory

- Someone adds a new column upstream and the pipeline silently drops it

- The nightly run fails and nobody notices until the Monday morning standup

- The CEO asks why yesterday's numbers aren't in the dashboard

Now you need to add:

- Chunking for large tables

- Schema validation

- Error notifications

- Monitoring

Your 20-line script is now 500 lines. But that's fine – it's still manageable, you tell yourself.
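To make the chunking item concrete, here is a minimal sketch. It makes no claim about your actual stack: Python's built-in `sqlite3` stands in for both the source database and the warehouse so the example runs anywhere, and `copy_in_chunks` is a hypothetical helper name. If you stay with pandas, passing `chunksize=` to `pd.read_sql` gives a similar effect.

```python
import sqlite3


def copy_in_chunks(source, destination, table, chunk_size=1000):
    """Copy all rows of `table`, holding at most `chunk_size` rows in memory."""
    # Table names cannot be bound as parameters, so `table` must be trusted input.
    cursor = source.execute(f"SELECT * FROM {table}")
    # One "?" placeholder per column in the result set
    placeholders = ", ".join("?" for _ in cursor.description)
    copied = 0
    while True:
        rows = cursor.fetchmany(chunk_size)
        if not rows:
            break
        destination.executemany(
            f"INSERT INTO {table} VALUES ({placeholders})", rows
        )
        destination.commit()  # commit per chunk so progress survives a crash
        copied += len(rows)
    return copied


# Demo with in-memory stand-ins for the two databases
source = sqlite3.connect(":memory:")
destination = sqlite3.connect(":memory:")
for conn in (source, destination):
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?)", [(i, i * 1.5) for i in range(2500)]
)
source.commit()

copied = copy_in_chunks(source, destination, "orders", chunk_size=1000)
print(f"copied {copied} rows")
```

Even this small version raises questions the original script never had to answer, such as what happens when the run dies between the second and third chunk.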

The business loves having data in the warehouse. Now they want more:

- "Can we also sync the customers table?"

- "We need hourly updates, not daily"

- "Can you add data from our CRM?"

- "We need historical data preserved"

Each request seems reasonable. But the complexity compounds, because every new source and schedule has to work alongside everything that is already there. You now have:

- Multiple data sources

- Different sync schedules

- Incremental update logic

- State management

You've accidentally built a distributed system.
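Incremental update logic and state management usually end up looking something like the sketch below. It is one common approach (a "high-water mark" on an `updated_at` column), not the only one. The `sync_state` table, the column names, and the function names are all assumptions made for illustration, and `sqlite3` again stands in for the real databases.

```python
import sqlite3


def get_watermark(dest, table):
    """Return the last synced `updated_at` value, or '' if never synced."""
    row = dest.execute(
        "SELECT last_value FROM sync_state WHERE table_name = ?", (table,)
    ).fetchone()
    return row[0] if row else ""


def sync_orders(source, dest):
    """Copy only rows changed since the stored watermark; return rows synced."""
    watermark = get_watermark(dest, "orders")
    rows = source.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    if not rows:
        return 0
    with dest:  # data and watermark are committed in the same transaction
        dest.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
        dest.execute(
            "INSERT OR REPLACE INTO sync_state VALUES (?, ?)",
            ("orders", rows[-1][2]),
        )
    return len(rows)


source = sqlite3.connect(":memory:")
dest = sqlite3.connect(":memory:")
for conn in (source, dest):
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)"
    )
dest.execute("CREATE TABLE sync_state (table_name TEXT PRIMARY KEY, last_value TEXT)")

source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, "2024-01-01T00:00:00"), (2, 20.0, "2024-01-02T00:00:00")],
)
source.commit()
first_run = sync_orders(source, dest)

# One row is updated and one is added upstream
source.execute(
    "UPDATE orders SET amount = 25.0, updated_at = '2024-01-03T00:00:00' WHERE id = 2"
)
source.execute("INSERT INTO orders VALUES (3, 30.0, '2024-01-03T12:00:00')")
source.commit()
second_run = sync_orders(source, dest)
third_run = sync_orders(source, dest)  # nothing changed since the last run
print(first_run, second_run, third_run)
```

Note what this sketch does not handle: deleted rows, rows that share the exact watermark timestamp, and clock skew between systems. Each of those is another piece of state to manage.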

Let me share some numbers. Here is a typical Year 1 breakdown, based on real costs from companies I've worked with:

Engineering Time:

- Initial development (2 engineers × 3 months): $120,000

- Bug fixes and maintenance (20% of 2 engineers): $80,000

- Performance optimization sprints: $40,000

- Adding monitoring and alerting: $20,000

Infrastructure:

- Servers and compute: $24,000/year

- Storage and networking: $12,000/year

- Monitoring tools: $6,000/year

Total Year 1: $302,000

But here's the thing – these are just the direct costs. The hidden costs are where it gets interesting.

Opportunity cost is the killer that nobody tracks in their spreadsheets. Those two engineers spending 3 months building pipelines? They could have been building features that directly impact revenue.

I spoke with a SaaS company last month. They had two senior engineers maintaining their data pipelines full-time. Their average feature takes one engineer-month to build and generates roughly $200,000 in annual recurring revenue.

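Two engineers working full-time is 24 engineer-months a year. Even if only half of that time had turned into shipped features, that is 12 features, which means their DIY pipelines are costing them $2.4 million per year in lost features. Nobody puts that in the build vs. buy analysis.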
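The arithmetic behind that figure can be sketched in a few lines. The inputs are the ones quoted above. The `utilization` parameter is an assumption added for illustration: the share of the two engineers' time that would realistically have become shipped features. At 50% the inputs produce $2.4 million; at 100% they produce twice that.

```python
ENGINEERS = 2
MONTHS_PER_YEAR = 12
ENGINEER_MONTHS_PER_FEATURE = 1
ARR_PER_FEATURE = 200_000  # dollars of annual recurring revenue per feature


def forgone_arr(utilization):
    """Recurring revenue not generated because the engineers maintain pipelines.

    `utilization` is the assumed fraction of their time (0.0 to 1.0) that
    would otherwise have gone into shipped features.
    """
    engineer_months = ENGINEERS * MONTHS_PER_YEAR * utilization
    features = engineer_months / ENGINEER_MONTHS_PER_FEATURE
    return features * ARR_PER_FEATURE


full = forgone_arr(1.0)
half = forgone_arr(0.5)
print(f"100% utilization: ${full:,.0f}")
print(f" 50% utilization: ${half:,.0f}")
```

Swap in your own team size and revenue per feature before using this in a build vs. buy discussion. The point is that the line item exists, not the exact number.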

When pipelines fail – and they will fail – the business loses trust in the data. I've seen this play out dozens of times:

- Marketing stops using the attribution dashboard and goes back to Excel

- Sales creates their own "shadow IT" reporting system

- Product managers start keeping their own metrics

- The CEO stops looking at the KPI dashboard

Each of these represents hours of duplicated work every week. One company I worked with calculated that their "trust tax" – the cost of people not trusting the central data platform – was over $300,000 per year in lost productivity.

Year 2 is when reality hits. You're not building anymore; you're just keeping things running:

Ongoing Costs:

- Maintenance (30% of 2 engineers): $120,000

- Infrastructure (growing with data): $60,000

- Incident response (4 major incidents): $40,000

- Performance rewrites: $50,000

- Documentation and knowledge transfer: $30,000

Year 2 Total: $300,000

But it gets worse. Remember that engineer who built the original pipeline? They're getting bored. They didn't become an engineer to babysit data copying scripts. They want to build new things, solve interesting problems.

So they leave.

Now you need to:

- Hire a replacement ($50,000 in recruiting costs)

- Have them reverse-engineer the undocumented pipeline ($30,000 in time)

- Fix all the things they break while learning ($20,000 in incidents)

By Year 3, everyone knows the current system isn't sustainable. The pipeline that was built to handle 1 GB of data was never designed for the 100 GB you're processing now. You have three options:

Option A: Keep patching

Continue applying band-aids. This costs about $400,000/year and makes engineers miserable.

Option B: Rebuild properly

Spend 6 months rebuilding with "proper" architecture. This costs $500,000 and you'll be having the same discussion in 3 years.

Option C: Migrate to a tool

Admit defeat and use a purpose-built solution. This costs $100,000 for the first year including migration.

Guess which option most companies choose? If you guessed Option B, you're right. The sunk cost fallacy is powerful.

Let's add it all up, using the cheaper $400,000 keep-patching figure for Year 3 to stay conservative:

Direct Costs:

- Year 1: $302,000

- Year 2: $300,000

- Year 3: $400,000

- Subtotal: $1,002,000

Hidden Costs:

- Opportunity cost (3 years): $600,000

- Trust tax and productivity loss: $300,000

- Engineer turnover and knowledge transfer: $100,000

- Subtotal: $1,000,000

Total 3-Year Cost: ~$2,000,000

Two million dollars for moving data from A to B.

Now let's look at using a purpose-built tool from day one:

Year 1:

- Tool licensing: $60,000

- Implementation and migration: $40,000

- Training: $10,000

- Total: $110,000

Year 2:

- Tool licensing: $60,000

- Minimal maintenance: $20,000

- Total: $80,000

Year 3:

- Tool licensing: $60,000

- Minimal maintenance: $20,000

- Total: $80,000

3-Year Total: $270,000

That's $1.7 million less than building your own.
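If you want to plug in your own numbers, the comparison can be reproduced as a small calculation. The figures below are the ones from this post; the dictionary and variable names are just illustrative.

```python
# DIY: direct costs per year and hidden costs over three years (dollars)
diy_direct = {"year_1": 302_000, "year_2": 300_000, "year_3": 400_000}
diy_hidden = {
    "opportunity_cost": 600_000,
    "trust_tax_and_productivity": 300_000,
    "turnover_and_knowledge_transfer": 100_000,
}

# Purpose-built tool: licensing plus implementation, training, and maintenance
tool = {
    "year_1": 60_000 + 40_000 + 10_000,
    "year_2": 60_000 + 20_000,
    "year_3": 60_000 + 20_000,
}

diy_total = sum(diy_direct.values()) + sum(diy_hidden.values())
tool_total = sum(tool.values())
savings = diy_total - tool_total

print(f"DIY, 3 years:  ${diy_total:,}")
print(f"Tool, 3 years: ${tool_total:,}")
print(f"Difference:    ${savings:,}")
```

Even if you zero out every hidden cost, the direct DIY costs alone still come to several times the tool total with these inputs.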

But the real difference isn't in the money – it's in what you can do with the time. Those two engineers? They're building features. That trust in data? It's intact. Those 3 AM pipeline failures? Someone else's problem.

I'm not saying never build your own pipelines. There are valid reasons to build:

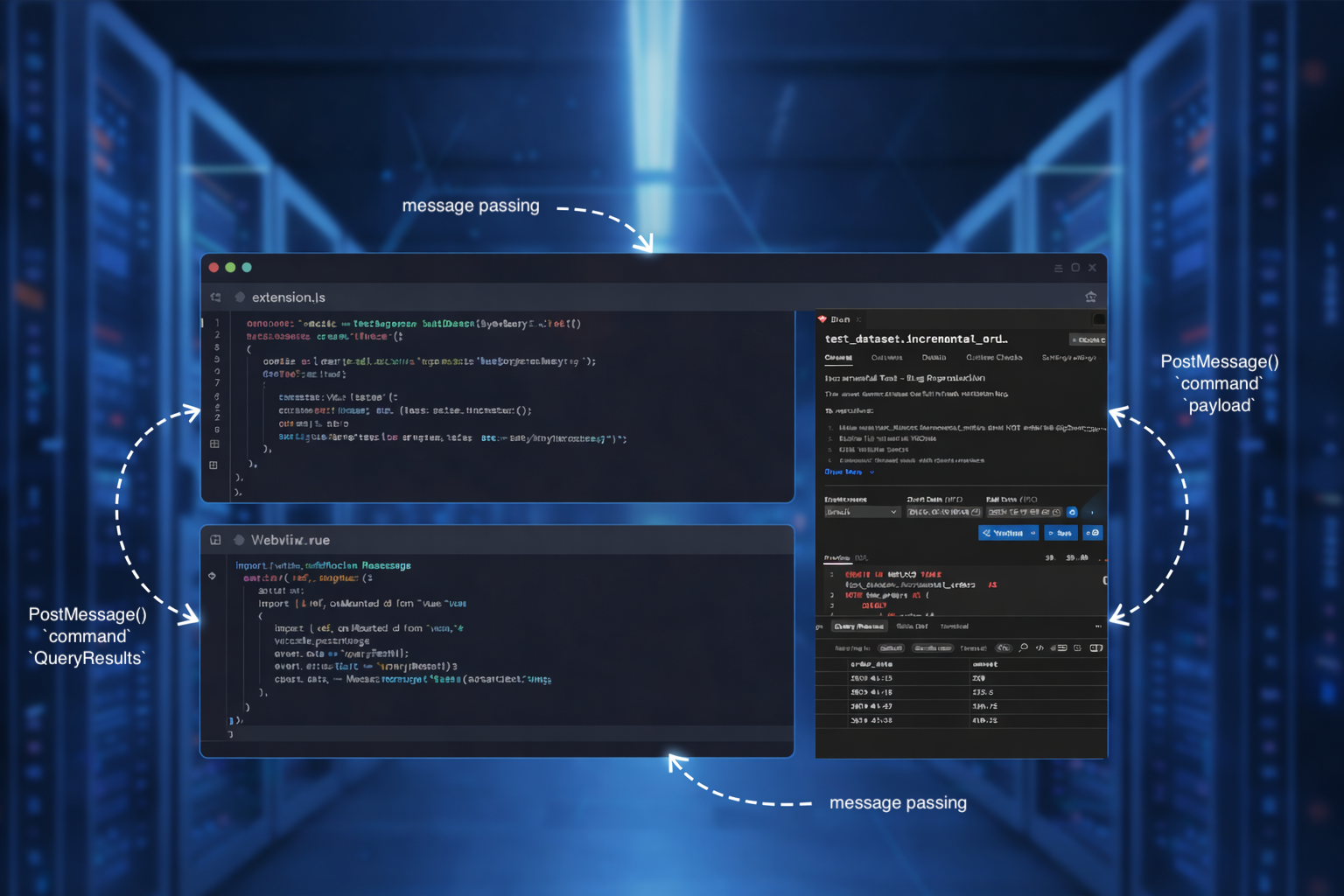

- You're building a data pipeline company (like we did with Bruin)

- Your requirements are genuinely unique (they probably aren't)

- You have a team whose full-time job is data infrastructure

- Data pipelines are your competitive advantage

For everyone else – the 95% of companies where data is important but not the core business – building your own pipelines is an expensive distraction.

Here's what nobody wants to admit: most data pipeline requirements are boring and similar. You need to:

- Move data reliably from sources to destinations

- Handle schema changes gracefully

- Process incrementally as data grows

- Monitor and alert on failures

- Ensure data quality
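To give a sense of what even the simplest of these involves, here is a rough sketch of detecting schema drift before a load, so that a new upstream column is reported instead of silently dropped. It relies on SQLite's `PRAGMA table_info`, which is specific to SQLite; against Postgres or a warehouse you would typically query `information_schema.columns` instead. The function names are hypothetical.

```python
import sqlite3


def column_names(conn, table):
    """Return the column names of `table` in declaration order."""
    # PRAGMA table_info yields one row per column; the name is field 1.
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


def detect_schema_drift(source, destination, table):
    """Compare column sets and report what changed upstream."""
    src = column_names(source, table)
    dst = column_names(destination, table)
    return {
        "added_upstream": [c for c in src if c not in dst],
        "removed_upstream": [c for c in dst if c not in src],
    }


source = sqlite3.connect(":memory:")
destination = sqlite3.connect(":memory:")
# Someone added `coupon_code` to the source after the pipeline was built
source.execute("CREATE TABLE orders (id INTEGER, amount REAL, coupon_code TEXT)")
destination.execute("CREATE TABLE orders (id INTEGER, amount REAL)")

drift = detect_schema_drift(source, destination, "orders")
print(drift)
```

Detecting the drift is the easy half. Deciding what to do about it (fail the run, add the column, backfill history) is where the real work starts.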

These are solved problems. Very smart people have spent years thinking about nothing but these problems. They've built tools that handle edge cases you haven't even imagined yet.

Your competitive advantage isn't in solving these generic problems. It's in understanding your business, your customers, your market. Every hour your engineers spend debugging why yesterday's pipeline run duplicated 10,000 records is an hour not spent on things that actually matter to your business.
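That duplicated-records scenario is at least easy to detect. A minimal data quality check, assuming the table has a column that should be unique (`id` here), might look like this. `find_duplicate_keys` is a hypothetical helper and `sqlite3` is a stand-in for the warehouse.

```python
import sqlite3


def find_duplicate_keys(conn, table, key):
    """Return (key_value, occurrences) for every key that appears more than once."""
    query = (
        f"SELECT {key}, COUNT(*) FROM {table} "
        f"GROUP BY {key} HAVING COUNT(*) > 1 ORDER BY {key}"
    )
    return conn.execute(query).fetchall()


warehouse = sqlite3.connect(":memory:")
# No primary key on the warehouse table, so nothing stops a double load
warehouse.execute("CREATE TABLE orders (id INTEGER, amount REAL)")

batch = [(1, 10.0), (2, 20.0)]
warehouse.executemany("INSERT INTO orders VALUES (?, ?)", batch)
warehouse.executemany("INSERT INTO orders VALUES (?, ?)", batch)  # retried run
warehouse.execute("INSERT INTO orders VALUES (3, 30.0)")

duplicates = find_duplicate_keys(warehouse, "orders", "id")
print(duplicates)
```

Running a check like this after every load tells you that something went wrong. Figuring out why is the part that eats the engineering hours.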

If you're reading this while maintaining a collection of homegrown pipelines, you're probably feeling a mix of recognition and dread. The good news is that migration is easier than you think:

- Start with new pipelines – build them the right way

- Migrate your most painful pipelines first

- Keep your business logic, replace the plumbing

- Gradually sunset the old system

Most teams can migrate in 3-6 months while maintaining operations. The relief is immediate.
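One way to build confidence before sunsetting an old pipeline is to run both systems side by side and compare their output. The sketch below is an assumption about how you might do that, not a prescribed procedure: it fingerprints a table by row count and a hash of its rows in key order. `table_fingerprint` is a hypothetical helper, and `sqlite3` stands in for the two destinations.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, sha256 hex digest) of all rows ordered by `key`."""
    digest = hashlib.sha256()
    count = 0
    # Iterate the cursor so the whole table is never held in memory at once
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


rows = [(1, 10.0), (2, 20.0), (3, 30.0)]
old_system = sqlite3.connect(":memory:")
new_system = sqlite3.connect(":memory:")
for conn in (old_system, new_system):
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
old_system.executemany("INSERT INTO orders VALUES (?, ?)", rows)
# Insertion order differs, but the fingerprint orders by key
new_system.executemany("INSERT INTO orders VALUES (?, ?)", reversed(rows))

matches_before = table_fingerprint(old_system, "orders", "id") == table_fingerprint(
    new_system, "orders", "id"
)

# Simulate the new pipeline producing a different value for one row
new_system.execute("UPDATE orders SET amount = 99.0 WHERE id = 2")
matches_after = table_fingerprint(old_system, "orders", "id") == table_fingerprint(
    new_system, "orders", "id"
)
print(matches_before, matches_after)
```

A mismatch does not tell you which system is right. With homegrown pipelines, it is not unusual to discover that the old one was the wrong one.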

I've built my share of DIY pipelines. I've also spent years building Bruin to solve these exact problems. But even if you don't use Bruin, please use something.

The real cost of DIY pipelines isn't the money – though that's substantial. It's the opportunity cost. It's the features not built, the insights not discovered, the problems not solved.

Your engineers are too valuable to spend their time rebuilding solved problems. Your business is too important to depend on fragile, homegrown infrastructure.

The best pipeline is the one you don't have to maintain.