This debate comes up constantly in data teams. A new engineer joins and wants to rewrite everything in their preferred language. The "SQL people" clash with the "Python people." Projects get delayed, technical debt accumulates, and it's hard to reach consensus.

Here's what I've observed: both sides often have valid points.

The challenge isn't determining which tool is objectively better. It's that teams frequently choose based on comfort rather than the actual requirements. This can lead to maintenance challenges, performance issues, and team friction.

Let's explore a more systematic way to make this decision.

Recently, I reviewed code from a team that had spent three weeks building a 500-line Python script to process 10GB of data. It took two hours to run and consumed $300/month in compute costs.

We rewrote it as a SQL query. Runtime dropped to 30 seconds with negligible costs.

But the opposite happens too: I've seen a 1,000-line SQL file with nested CTEs 15 levels deep, attempting to implement complex conditional business logic. It was difficult to maintain, test, and debug.

There's a pattern here: teams often choose their tool based on what they know, rather than what the job requires. This can have real costs:

- Performance degradation: 10x-100x slower execution

- Infrastructure costs: Processing in Python what should be in SQL can cost thousands monthly

- Engineering time: More code to write, test, and maintain

- Team friction: Debates in code reviews about tool choice

Here's a simple decision framework we'll expand on:

Use SQL if you're transforming entire datasets with standard operations.

Use Python if you need procedural logic, external integrations, or custom algorithms.

Use both when you can clearly separate concerns.

Let's look at when each tool works best.

SQL sometimes gets dismissed as "old school" or "not a real programming language."

But SQL is a domain-specific language built specifically for data transformation. For that particular task, it's remarkably effective.

SQL excels at set-based operations. When you need to operate on entire tables, SQL is purpose-built for the job:

-- Building a daily user activity table

SELECT

DATE(event_timestamp) as date,

user_id,

COUNT(*) as event_count,

COUNT(DISTINCT session_id) as session_count,

MAX(event_timestamp) as last_activity

FROM events

WHERE DATE(event_timestamp) = CURRENT_DATE()

GROUP BY 1, 2

This kind of transformation is SQL's sweet spot. It's declarative - you describe what you want, not how to get it. The query optimizer figures out the most efficient execution path.

Writing this in Python would require:

- Manual iteration over millions of rows

- Memory management considerations

- No automatic optimization

- Significantly slower execution

Dimension tables are all about joins and aggregations. This is what SQL was designed for:

CREATE TABLE dim_users AS

SELECT

u.user_id,

u.email,

u.created_at,

COUNT(DISTINCT o.order_id) as lifetime_orders,

SUM(o.total_amount) as lifetime_value,

MAX(o.created_at) as last_order_date,

FIRST_VALUE(o.utm_source) OVER (

PARTITION BY u.user_id

ORDER BY o.created_at

) as acquisition_source

FROM users u

LEFT JOIN orders o ON u.user_id = o.user_id

GROUP BY u.user_id, u.email, u.created_at

If you've read our Firebase analytics post, you know these are critical. SQL makes them trivial:

CREATE TABLE users_daily AS

SELECT

user_pseudo_id,

event_date,

COUNT(*) as total_events,

COUNTIF(event_name = 'session_start') as sessions,

COUNTIF(event_name = 'purchase') as purchases,

SUM(CASE WHEN event_name = 'purchase'

THEN (SELECT value.int_value FROM UNNEST(event_params)

WHERE key = 'value')

END) as revenue

FROM `project.analytics_XXXXXX.events_*`

WHERE _TABLE_SUFFIX = FORMAT_DATE('%Y%m%d', CURRENT_DATE())

GROUP BY 1, 2

SQL's set-based nature makes quality checks clean and fast:

-- Find duplicates

SELECT user_id, COUNT(*)

FROM users

GROUP BY user_id

HAVING COUNT(*) > 1

-- Find orphaned records

SELECT o.order_id

FROM orders o

LEFT JOIN users u ON o.user_id = u.user_id

WHERE u.user_id IS NULL

Data warehouses are optimized for incremental processing:

-- Only process new data

INSERT INTO daily_aggregates

SELECT

date,

metric,

value

FROM source_data

WHERE date > (SELECT MAX(date) FROM daily_aggregates)

The warehouse handles partitioning, clustering, and optimization. You just describe the logic.

But SQL isn't a universal solution. It breaks down when you need:

Complex procedural logic: Nested if-else statements with multiple conditions become difficult to read and maintain.

API calls: SQL isn't designed to call external services.

Custom algorithms: Implementing something like a recommendation engine or custom deduplication logic in SQL is challenging.

Dynamic schema handling: SQL expects schemas to be known. Processing arbitrary JSON structures or dynamically changing schemas can be difficult.

When you encounter these limitations, Python is often a better choice.

Python is the opposite of SQL. Where SQL is specialized, Python is general-purpose. Where SQL is declarative, Python is imperative.

This flexibility is both its strength and its danger.

Python excels at procedural logic and integration. When you need to do things step-by-step, make decisions, or interact with external systems, Python is a natural fit.

Imagine you're implementing a customer segmentation algorithm with multiple conditional branches:

def segment_customer(customer):

if customer['lifetime_value'] > 10000:

if customer['days_since_purchase'] < 30:

return 'vip_active'

else:

return 'vip_churning'

elif customer['lifetime_value'] > 1000:

if customer['purchase_frequency'] > 4:

return 'frequent_buyer'

else:

return 'occasional_buyer'

else:

if customer['days_since_signup'] < 30:

return 'new_user'

else:

return 'low_value'

You could write this in SQL with CASE statements, but it would be harder to maintain.

Python has extensive ML libraries. Feature engineering, model training, and predictions are all straightforward in Python:

import pandas as pd

from sklearn.ensemble import RandomForestClassifier

# SQL for data prep

data = warehouse.query("""

SELECT user_id, feature1, feature2, churned

FROM ml_training_data

""")

# Python for ML

X = data[['feature1', 'feature2']]

y = data['churned']

model = RandomForestClassifier()

model.fit(X, y)

predictions = model.predict(new_data)

When you need to call external APIs, Python is well-suited:

import requests

import pandas as pd

def enrich_with_clearbit(emails):

enriched_data = []

for email in emails:

response = requests.get(

f'https://person.clearbit.com/v2/people/find',

params={'email': email},

headers={'Authorization': f'Bearer {API_KEY}'}

)

if response.ok:

data = response.json()

enriched_data.append({

'email': email,

'company': data.get('employment', {}).get('name'),

'title': data.get('employment', {}).get('title'),

'seniority': data.get('employment', {}).get('seniority')

})

return pd.DataFrame(enriched_data)

Custom validation rules that go beyond simple SQL checks:

def validate_order_data(orders_df):

issues = []

# Check for suspiciously high order values

for idx, row in orders_df.iterrows():

if row['total_amount'] > row['expected_range_max'] * 2:

# Call fraud detection API

fraud_score = check_fraud(row)

if fraud_score > 0.8:

issues.append({

'order_id': row['order_id'],

'issue': 'potential_fraud',

'score': fraud_score

})

# Check for invalid product combinations

for idx, row in orders_df.iterrows():

products = set(row['product_ids'])

if 'warranty' in products and 'digital_product' in products:

issues.append({

'order_id': row['order_id'],

'issue': 'invalid_product_combination'

})

return issues

Working with deeply nested or irregular JSON structures:

def flatten_firebase_params(event_params):

"""Extract parameters from Firebase nested structure"""

result = {}

for param in event_params:

key = param['key']

# Value can be in different fields

if 'string_value' in param:

result[key] = param['string_value']

elif 'int_value' in param:

result[key] = param['int_value']

elif 'float_value' in param:

result[key] = param['float_value']

elif 'double_value' in param:

result[key] = param['double_value']

return result

But Python has real downsides for data transformation:

Performance on large datasets: Loading 10GB into pandas will crash or crawl. Python's single-threaded nature doesn't help.

No automatic optimization: You write the loop, you get the loop. No query optimizer to save you.

More code to maintain: What SQL does in 10 lines takes Python 50-100 lines.

Slower iteration: Running SQL is instant. Running Python requires dependencies, environments, and setup.

The key is recognizing when Python's flexibility is worth these costs.

Here are two examples that illustrate common patterns I've observed.

A startup with a strong ML team decided everything should be Python. "We're a Python shop," they said.

Here's what they built for daily user aggregations:

import pandas as pd

from google.cloud import bigquery

def aggregate_user_metrics(date):

client = bigquery.Client()

# Read entire events table into memory

query = f"""

SELECT * FROM events

WHERE DATE(timestamp) = '{date}'

"""

events_df = client.query(query).to_dataframe()

# Group by user

results = []

for user_id in events_df['user_id'].unique():

user_events = events_df[events_df['user_id'] == user_id]

session_starts = len(user_events[user_events['event_name'] == 'session_start'])

purchases = len(user_events[user_events['event_name'] == 'purchase'])

revenue = 0

for idx, row in user_events[user_events['event_name'] == 'purchase'].iterrows():

for param in row['event_params']:

if param['key'] == 'value':

revenue += param['int_value']

results.append({

'user_id': user_id,

'date': date,

'session_starts': session_starts,

'purchases': purchases,

'revenue': revenue

})

results_df = pd.DataFrame(results)

results_df.to_gbq('analytics.users_daily', if_exists='append')

This code:

- Loads 10GB of data into memory (often crashes)

- Processes row-by-row instead of in sets

- Takes 2 hours to run

- Costs $300/month in compute

The SQL version:

INSERT INTO analytics.users_daily

SELECT

user_id,

DATE(timestamp) as date,

COUNTIF(event_name = 'session_start') as session_starts,

COUNTIF(event_name = 'purchase') as purchases,

SUM(CASE WHEN event_name = 'purchase'

THEN (SELECT int_value FROM UNNEST(event_params) WHERE key = 'value')

END) as revenue

FROM events

WHERE DATE(timestamp) = CURRENT_DATE()

GROUP BY 1, 2

Runtime: 30 seconds. Cost: negligible. Maintainability: improved.

The real cost wasn't just the $300/month. It was the time spent optimizing Python, the incidents when it crashed, and the debugging effort for memory issues.

On the other hand, I've worked with teams that tried to use SQL exclusively, even for complex procedural logic.

One team needed to implement customer churn prediction with complex logic:

WITH customer_features AS (

SELECT

user_id,

-- 50 more feature calculations

FROM users

),

behavior_scores AS (

SELECT

user_id,

CASE

WHEN feature1 > threshold1 AND feature2 < threshold2 THEN

CASE

WHEN feature3 IN (SELECT value FROM lookup1) THEN

CASE

WHEN feature4 > (SELECT AVG(feature4) FROM customer_features) THEN 0.8

ELSE 0.6

END

ELSE

CASE

WHEN feature5 BETWEEN value1 AND value2 THEN 0.4

ELSE 0.2

END

END

ELSE

-- 10 more levels of CASE WHEN

END as churn_score

FROM customer_features

)

-- 500 more lines of nested CTEs

SELECT * FROM final_scores

This 1,000-line SQL file had several challenges:

- Difficult to test (limited unit testing options for SQL logic)

- Hard to debug (which of 15 nested CTEs had the issue?)

- Difficult to understand (complex nested logic)

- Fragile (schema changes required extensive updates)

The team spent three days tracking down a bug that was a misplaced parenthesis in a CASE statement.

The solution was to move the complex logic to Python:

def calculate_churn_score(customer):

score = 0.0

if customer['feature1'] > threshold1 and customer['feature2'] < threshold2:

if customer['feature3'] in lookup_values:

if customer['feature4'] > avg_feature4:

score = 0.8

else:

score = 0.6

else:

if threshold_min <= customer['feature5'] <= threshold_max:

score = 0.4

else:

score = 0.2

return score

# Test it

def test_churn_score():

assert calculate_churn_score({'feature1': 10, ...}) == 0.8

assert calculate_churn_score({'feature1': 5, ...}) == 0.4

Now it's readable, testable, and maintainable.

Here's a practical framework for making this decision:

✅ You're operating on entire tables or large datasets

SQL is built for set-based operations. If you're transforming millions of rows, use SQL.

✅ You're doing standard aggregations and transformations

GROUP BY, JOIN, window functions - SQL's bread and butter.

✅ Performance is critical

SQL with pushdown optimization will almost always beat Python.

✅ Logic maps cleanly to relational operations

If you can express it naturally with SELECT, WHERE, GROUP BY, use SQL.

✅ Multiple people need to understand and modify it

SQL is more widely understood than Python data manipulation patterns.

✅ Row-by-row processing is unavoidable

Some logic genuinely needs to look at individual records with complex state.

✅ You need external system integration

API calls, file system operations, external tools - Python's domain.

✅ Complex procedural logic

If-else trees, loops with state, multi-step algorithms - use Python.

✅ Custom algorithms beyond SQL capabilities

ML models, graph algorithms, custom deduplication - Python is better suited.

✅ Working with unstructured or semi-structured data

Deeply nested JSON, text processing, image data - Python has the libraries.

✅ SQL can do the heavy lifting, Python the finishing touches

Filter and aggregate in SQL, apply complex logic in Python.

✅ Clear handoff points exist

SQL for data prep, Python for ML. SQL for final aggregation after Python processing.

✅ You can separate concerns cleanly

Keep them in separate steps rather than mixing (no Python UDFs in SQL).

Here are three patterns that have worked well in production environments.

Start with SQL, add Python only where necessary.

Example: Daily aggregations with anomaly detection

-- daily_metrics.sql

-- Use SQL for the heavy lifting

SELECT

date,

metric_name,

metric_value,

AVG(metric_value) OVER (

PARTITION BY metric_name

ORDER BY date

ROWS BETWEEN 7 PRECEDING AND 1 PRECEDING

) as avg_last_7_days,

STDDEV(metric_value) OVER (

PARTITION BY metric_name

ORDER BY date

ROWS BETWEEN 7 PRECEDING AND 1 PRECEDING

) as stddev_last_7_days

FROM metrics

WHERE date >= CURRENT_DATE() - 30

# anomaly_detection.py

# Use Python for the complex logic

def detect_anomalies(metrics_df):

anomalies = []

for idx, row in metrics_df.iterrows():

if row['stddev_last_7_days'] > 0:

z_score = (row['metric_value'] - row['avg_last_7_days']) / row['stddev_last_7_days']

if abs(z_score) > 3:

# Complex anomaly logic

severity = classify_anomaly(row, z_score)

if should_alert(row['metric_name'], severity):

anomalies.append({

'date': row['date'],

'metric': row['metric_name'],

'severity': severity

})

return anomalies

SQL does what it's good at (aggregations, window functions). Python does what it's good at (complex conditional logic, integration with alerting systems).

SQL to filter and join → Python for complex logic → SQL for final aggregation

-- Step 1: SQL preprocessing

-- Narrow down to relevant data

CREATE TEMP TABLE relevant_orders AS

SELECT

o.order_id,

o.user_id,

o.items,

u.segment,

u.lifetime_value

FROM orders o

JOIN users u ON o.user_id = u.user_id

WHERE o.created_at >= CURRENT_DATE() - 7

AND o.status = 'completed'

AND u.segment IN ('vip', 'frequent_buyer')

# Step 2: Python processing

# Complex product recommendation logic

def generate_recommendations(orders_df):

recommendations = []

for idx, row in orders_df.iterrows():

# Complex algorithm considering:

# - Purchase history

# - Collaborative filtering

# - Inventory levels (API call)

# - Personalization rules

recs = recommendation_engine.predict(

user_id=row['user_id'],

context=row['items'],

segment=row['segment']

)

recommendations.extend(recs)

return pd.DataFrame(recommendations)

-- Step 3: SQL aggregation

-- Roll up results for reporting

INSERT INTO daily_recommendation_stats

SELECT

date,

product_id,

COUNT(*) as recommendation_count,

COUNT(DISTINCT user_id) as user_count,

SUM(CASE WHEN clicked THEN 1 ELSE 0 END) as clicks

FROM recommendations

GROUP BY 1, 2

Each layer does what it does best.

Use Python to generate SQL for repetitive patterns.

def generate_daily_aggregation(table_name, metrics):

"""Generate SQL for daily metric aggregation"""

metric_clauses = []

for metric in metrics:

if metric['type'] == 'count':

clause = f"COUNTIF({metric['condition']}) as {metric['name']}"

elif metric['type'] == 'sum':

clause = f"SUM({metric['expression']}) as {metric['name']}"

elif metric['type'] == 'avg':

clause = f"AVG({metric['expression']}) as {metric['name']}"

metric_clauses.append(clause)

sql = f"""

INSERT INTO {table_name}_daily

SELECT

DATE(timestamp) as date,

user_id,

{',\n '.join(metric_clauses)}

FROM {table_name}

WHERE DATE(timestamp) = CURRENT_DATE()

GROUP BY 1, 2

"""

return sql

# Define metrics in config

metrics = [

{'name': 'sessions', 'type': 'count', 'condition': "event_name = 'session_start'"},

{'name': 'purchases', 'type': 'count', 'condition': "event_name = 'purchase'"},

{'name': 'revenue', 'type': 'sum', 'expression': 'transaction_value'}

]

# Generate and execute SQL

sql = generate_daily_aggregation('events', metrics)

This is safer than dynamic SQL and more maintainable than copy-pasting similar queries.

Here's something people don't talk about enough: the right tooling makes this decision easier.

If you're running SQL in one place, Python in another, deploying separately, monitoring separately - you're going to default to using one tool for everything. The friction of using both is too high.

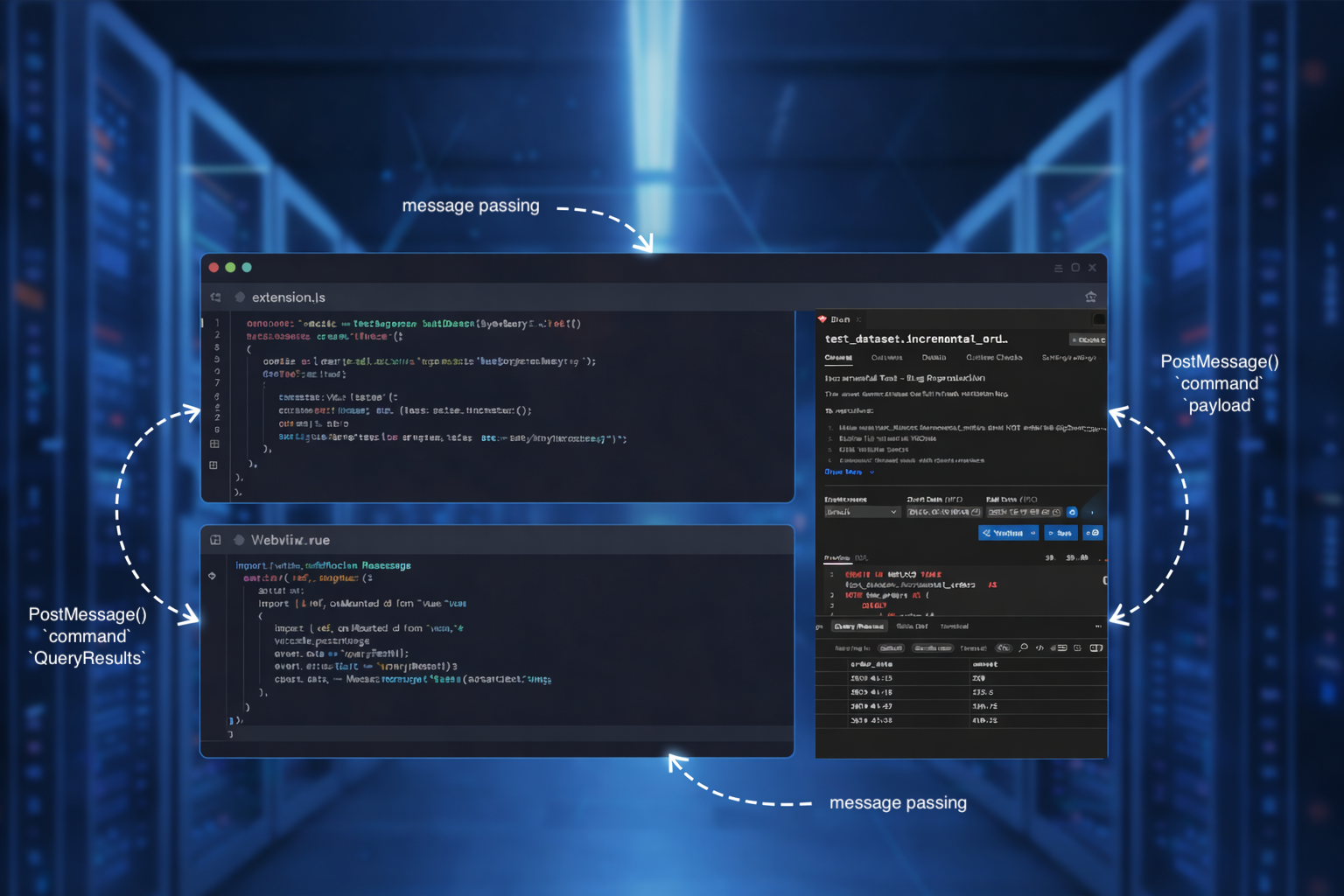

This is why we built Bruin to run both SQL and Python in the same pipeline, with the same tooling:

# pipeline.yml

assets:

- name: daily_aggregations

type: bq.sql

materialization:

type: table

- name: anomaly_detection

type: python

depends:

- daily_aggregations

- name: final_metrics

type: bq.sql

depends:

- anomaly_detection

When both tools are equally easy to use:

- You choose based on requirements, not friction

- Handoffs between SQL and Python are clean

- Everything is version controlled together

- Testing works the same way

- Deployment is unified

If your current setup makes it painful to use both, you'll keep forcing everything into one tool. That's when you end up with 500-line Python scripts doing GROUP BYs or 1,000-line SQL files implementing procedural logic.

Good tooling removes the excuse.

Here are some common patterns that can lead to problems:

Comfort is valuable, but processing 10GB in pandas when a SQL query would take 30 seconds can be inefficient and costly.

Consider learning enough SQL to recognize when it's the better tool.

While SQL expertise is valuable, some tasks like implementing recommendation engines are more naturally suited to procedural languages.

Python is indeed more flexible. However, it can be significantly slower for aggregations, use more memory, and require more code.

Flexibility isn't always the most important factor. Sometimes the constraints of SQL lead to better solutions.

-- DON'T DO THIS

SELECT

user_id,

my_python_udf(event_data) as processed_data

FROM events

This approach combines the limitations of both:

- Loses SQL's set-based optimization

- Adds Python's performance overhead

- Creates deployment complexity

- Makes debugging more difficult

If you need Python logic, consider doing it in a separate step.

Making assumptions about performance without measuring can lead to suboptimal choices.

It's worth running benchmarks to validate your assumptions before committing to an approach.

If you're considering changing how your team approaches this, here are some suggestions:

Look for these red flags:

Python doing SQL's job:

- Large dataframe operations

- Group-bys and aggregations in pandas

- Loading entire tables into memory

SQL doing Python's job:

- Nested CASE statements 10+ levels deep

- "Creative" SQL trying to implement loops

- Undocumented 500+ line queries

Rather than rewriting everything, consider starting with the most problematic pipeline:

- The one that breaks most often

- The one that's hardest to maintain

- The one with the highest operational costs

Rewrite that one using the appropriate tool. Measure the improvement and share the results with your team.

If your team only knows one language, they'll only use one language.

Invest in learning:

- Python engineers: Learn window functions, CTEs, and optimization

- SQL engineers: Learn pandas, API calls, and procedural patterns

You don't need experts. You need people who can recognize when to switch tools.

Remove the friction. If Python requires a complex deployment process but SQL is one click, everyone will use SQL.

Use tools that make both first-class citizens.

Based on experience with many teams, here are some principles that tend to work well:

The best data engineers are bilingual. They understand both SQL and Python, and they choose thoughtfully between them.

Start with SQL unless you have a specific reason not to. For data transformation, SQL is often a good default. Consider whether Python adds enough value to justify the switch.

Profile and benchmark - don't assume. Measure performance rather than relying on assumptions.

Make it easy to use both. Tool friction can drive suboptimal decisions. When your platform makes both SQL and Python easy to use, you're more likely to make the right choice.

Optimize for maintainability and performance. Consider the long-term implications of your tool choice, not just immediate comfort.

The Python vs SQL debate often presents a false choice.

The key question isn't "which is better?" but rather "which is right for this specific transformation?"

Teams sometimes default to the tool they know best, rather than the tool best suited to the task. This can lead to performance issues, higher costs, and maintenance challenges.

A better approach is to:

- Understand what each tool does well

- Recognize the patterns that favor one over the other

- Build competency in both to make informed choices

- Use tooling that makes both options accessible

Your data platform should make it easy to use SQL when SQL is appropriate and Python when Python is appropriate, without unnecessary friction.

This is one of the goals we had when building Bruin.

Consider experimenting with your next data transformation by implementing it both ways.

Write it in SQL. Write it in Python. Compare:

- Development time

- Execution time

- Code maintainability

- Performance at scale

This hands-on comparison can be illuminating.

If you're interested in how Bruin enables running both SQL and Python in the same pipeline with unified tooling, you can check out our docs or join our Slack community to discuss your specific use cases.

The best pipeline uses the right tool for each job.