The Pains of Data Ingestion

One of the first issues companies run into when it comes to analyzing their data is having to move the data off of their production databases:

- The production instances have different abilities that are not suitable for analytical workloads, such as row-oriented vs column-oriented databases.

- Analytical use-cases have very different SLAs and requirements than production applications, which means their reliability needs are very different. An analytical query may take 1.2s instead of 400ms and that would be fine, whereas that latency could be a huge disruption to the user experience if it happened on the production database.

- Online Transaction Processing (OLTP) databases are focused on transactional use-cases, which means they lack quite a few features around data analysis.

Due to these contributing factors, companies usually move their data off to an analytical database such as Google BigQuery or Snowflake after a certain size and scale for analytical purposes.

While moving the data to a database that is fit for purpose sounds good, it has its own challenges:

- The data needs to be copied over at a regular cadence via some tool/custom code. This means extra effort and cost to build and maintain.

- The data is now duplicated across multiple databases, meaning that changes in the source need to be accurately reflected in the analytical database.

- The assumptions around the atomicity/reliability of the data change since there are multiple places where the data resides now.

- After a certain data size, transferring the data becomes expensive and slow, requiring further engineering investment to make the process more efficient.

All of these reasons build up the problems around data ingestion, and there are already a bunch of tools in the market that aim to solve this.

The moment the data ingestion/copy problem is acknowledged, the first reaction across many teams is to build a tool that does the ingestion for them, and then schedule it via cronjobs or more advanced solutions. The problem sounds simple on the surface:

- Download a copy of the original data

- Upload it to the destination database either via SQL

insert statements, or some other platform-specific way to load the data into the database

However, this on-the-surface analysis forgets quite a few crucial questions:

- How do you ensure the data is copied over accurately?

- What happens when there's a bug that requires copying the data again?

- What if the data does not fit into the memory at once? How do you paginate?

- What happens as the data grows and copying/overwriting everything becomes too slow/expensive?

- What happens when the schema of the data changes?

- How does the team know about failures?

- Where do you deploy the logic?

- and quite a few more…

As you can see, there are many open points and they all require a solid understanding of the problem at hand, along with the investment to make the overall initiative success. Otherwise, the engineering team builds quick hacks to get them up and running, and these "hacks" start to become the backbone of the analytical use-cases, making it very hard, if not impossible, to evolve the architecture as the business evolves.

Some smart people saw the problem at hand and came up with various solutions to make this process easier.

Over the years some teams have decided that data ingestion can be performed simply via UI-driven solutions that have pre-built connectors across various platforms, which means non-technical people can also ingest data. Two major players that come to mind are Fivetran and Airbyte, both giant companies trying to tackle the long-tail of the data ingestion problem.

Even though there are a few differences between these no-code platforms, their primary approach is that you use their UI to set up connectors, and you forget about the problem without needing any technical person, e.g. a marketing person can set up a data ingestion task from Postgres to BigQuery.

While these tools do have a great deal of convenience, they still pose some challenges:

- The movement of the data is a fairly technical work that has quite a few questions about the ways of copying the data, and the data work rarely ends after just copying the data, therefore technical people such as data analysts, scientists, or engineers still need to be involved in the process, which means that the actual audience is the data people rather than non-technical folks.

- The UI-driven workflow causes lock-in, which means that the company is not going to be able to move away from these platforms until they build a replacement, which usually means further investment later on without disrupting the current ways of working + migrating the existing usecases.

- For the open-source solutions such as Airbyte, they still need to be hosted and maintained internally, which means engineering time and effort & infrastructure costs.

All in all, while the UI-driven data ingestion tools like Fivteran or Airbyte allow teams to get going from zero, there are still some issues that causes teams to stay away from them and resort to writing code due to the flexibility it provides.

There has been an emerging open-source Python library from the company dltHub called dlt, which focuses more on the use cases where there'll still be code written to ingest the data, but the code could actually be a lot smaller and maintainable. dlt has built-in open-source connectors, but it also allows custom sources & destinations to be built by teams for their specific needs. It is flexible but allows quick iteration when it comes to ingestion.

There are a couple of things dlt takes care of very nicely:

- dlt supports schema evolution, meaning that when the schema of an upstream dataset changes, dbt will make sure the destination tables are updated accordingly based on the changes.

- dlt supports custom sources and destinations, meaning that their prebuilt sources & destinations can be combined with custom ones.

- dlt has support for incremental updates and deduplication, which means that the data can be incrementally updated with only the changed data while ensuring it still matches the source data.

dlt is quite a powerful library and has a very vibrant, growing community. It might be the perfect companion when it comes to engineers wanting to write code for certain custom requirements.

However, we felt that there might be a middle ground for simpler use-cases that doesn't require coding, but also doesn't lock us into a UI-driven workflow.

While we like dlt a lot at Bruin, we felt that there were quite a few simpler scenarios that we couldn't justify writing, maintaining, and deploying code for:

- Some of our customers wanted to be able to simply copy a source table to a destination, and override the data in the destination because the data was small enough.

- Some others required fixed incremental strategies, such as "just get the latest data based on the

updated_at column. - Some others needed to be able to merge the new records with the old ones incrementally.

While all of these are possible with dlt, it requires these people to write code and figure out a way to deploy them and monitor them. It is not incredibly hard, but it is also not trivial. Seeing all these patterns, we have decided to take a stab at the problem in an open-source fashion.

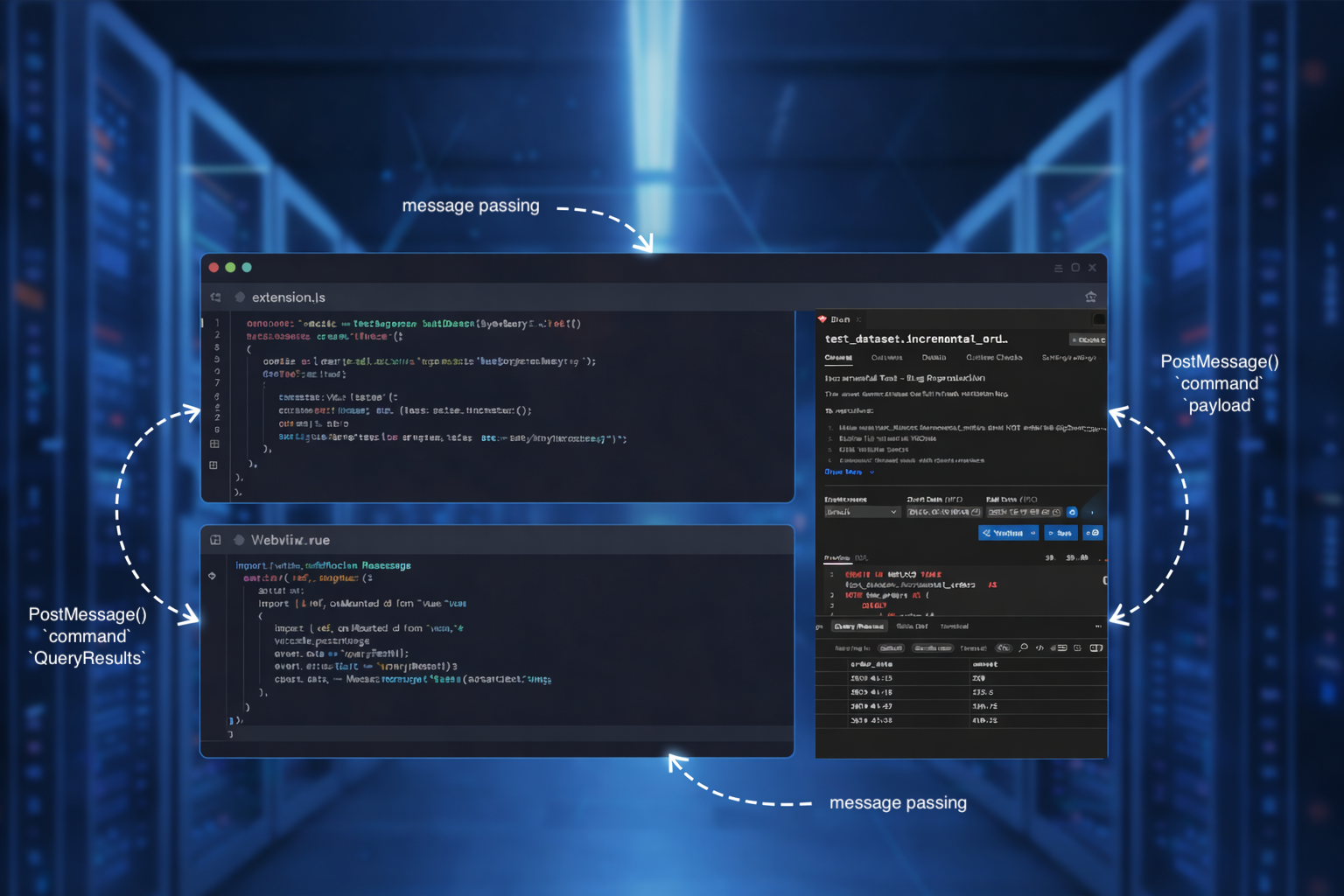

ingestr is a command-line application that allows you to ingest data from any source into any destination using simple command-line flags, without having to write any code and still having it as part of your tech stack.

- ✨ copy data from your Postgres / MySQL / SQL Server or any other source into any destination, such as BigQuery or Snowflake

- ➕ incremental loading

- 🐍 single-command installation:

pip install ingestr

ingestr takes away the complexity of managing any backend or writing any code for ingesting data, simply run the command and watch the magic

.

ingestr makes a couple of opinionated decisions about how the ingestion should work:

- it is built as an open-source solution, and anyone can use it for any purpose based on its permissive license

- ingestr treats everything as a URI: every source and every destination has its own URI based on SQLAlchemy connection URIs.

- ingestr has a few built-in incremental loading strategies:

replace, append, merge and delete+insert

While there will be quite a few scenarios where the teams would benefit from the flexibility dlt provides, we believe that 80% of the real-life scenarios out there would fall into these presets, and for those ingestr could simplify things quite a bit.

🌟 Give it a look and give us a star on GitHub! We'd love to hear your feedback and feel free to join our Slack community here.